Introduction: Why Platform Engineering Needs Golden Path Kubernetes Workflows

Today, deploying an application on Kubernetes typically requires creating and managing a variety of interdependent resources such as Deployments, Services, Secrets, and ConfigMaps. Platform engineers are responsible for ensuring that these resources are created and configured correctly and work together seamlessly. This orchestration is often complex and error-prone, leading to challenges such as dependency management and the operational overhead of maintaining custom APIs and controllers.

Why Kro and Platform Engineering Matter

If you are a DevOps engineer, platform engineer, or developer working with Kubernetes, this article will provide you with:

- A clear understanding of the common challenges in Kubernetes resource management.

- Insight into how kro simplifies these challenges by abstracting resource dependencies and lifecycle management.

- A detailed explanation of kro’s architecture and workflow.

- A practical example of defining and deploying applications using kro.

This knowledge will help you use kro to improve your Kubernetes deployments, reduce operational overhead, and enhance developer experience the platform engineering way. Before looking at kro, let's understand the concepts of platform engineering and Internal Developer Platforms (IDPs).

Platform Engineering and Internal Developer Platforms (IDP)

Platform engineering is a methodology based on DevOps principles that aims to improve developer experience and self-service within a safe, controlled environment, in order to improve each development team's security, compliance, costs, and time to business value. It is a shift in mindset toward treating the platform as a product, backed by a collection of tools that support it. One of the main enablers of this self-service is the internal developer portal, a centralized interface that lets developers discover, provision, and manage resources using predefined templates and golden paths. By providing self-service tools, standardized workflows, and automated infrastructure through such portals, platform engineering reduces cognitive load and context switching, which significantly improves developer productivity and speeds up feature delivery.

The development of golden paths in Kubernetes, meaning well-supported workflows or templates that encode best practices and standards for deploying and managing services, is an essential part of this approach. Besides making it easier for developers to get started and stay efficient as they scale projects or onboard new team members, golden paths also help ensure consistency, security, and compliance across teams. The result is an environment that is more developer-friendly, reliable, and efficient, allowing teams to innovate with confidence and speed.

The Real Kubernetes Challenges: Dependency Hell and CRD Sprawl

When provisioning application environments, platform engineers usually encounter two problems:

- Dependency Hell: Many Kubernetes resources depend on each other. For example, a Deployment might require a ConfigMap to exist before its Pods can start, and a Service must be created to expose the Pods. Managing the order of creation and making sure that an update to one resource is reflected in the others is therefore a manual and error-prone process. If dependencies are not handled correctly, the result can be application failures, configuration drift, or even outages, because resources may be missing when needed or misconfigured.

- Increase in custom APIs and controllers: To simplify complex deployments, platform teams often create custom resource definitions (CRDs) and write controllers to automate the management of groups of resources. While this makes deployments easier for end users, it introduces significant maintenance overhead. Each new CRD and controller adds to the complexity of the system and requires ongoing updates, testing, and documentation. Over time, clusters can become cluttered with redundant or obsolete CRDs, making them harder to manage and troubleshoot.
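As an illustration of the first problem, here is a minimal pair of plain Kubernetes manifests (the names `my-app` and `my-app-config` are hypothetical). The Deployment depends on the ConfigMap, but nothing in the manifests expresses that ordering: if the ConfigMap is missing, the Pods stay in `CreateContainerConfigError` until it appears, and renaming the ConfigMap means finding and updating every reference by hand.

```yaml
# Hypothetical names: my-app, my-app-config
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-app-config
data:
  APP_MODE: production
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: nginx
          envFrom:
            # Hard-coded reference: Kubernetes does not know this ConfigMap must
            # exist first, and a rename has to be mirrored here manually.
            - configMapRef:
                name: my-app-config
```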

These challenges make it difficult to manage Kubernetes efficiently and reliably, especially in large and multi-cloud environments. In this article we look at how open-source tooling can address these problems, with a detailed look at kro (Kube Resource Orchestrator).

Kro

How Kro Works: A Kube Resource Orchestrator Blueprint

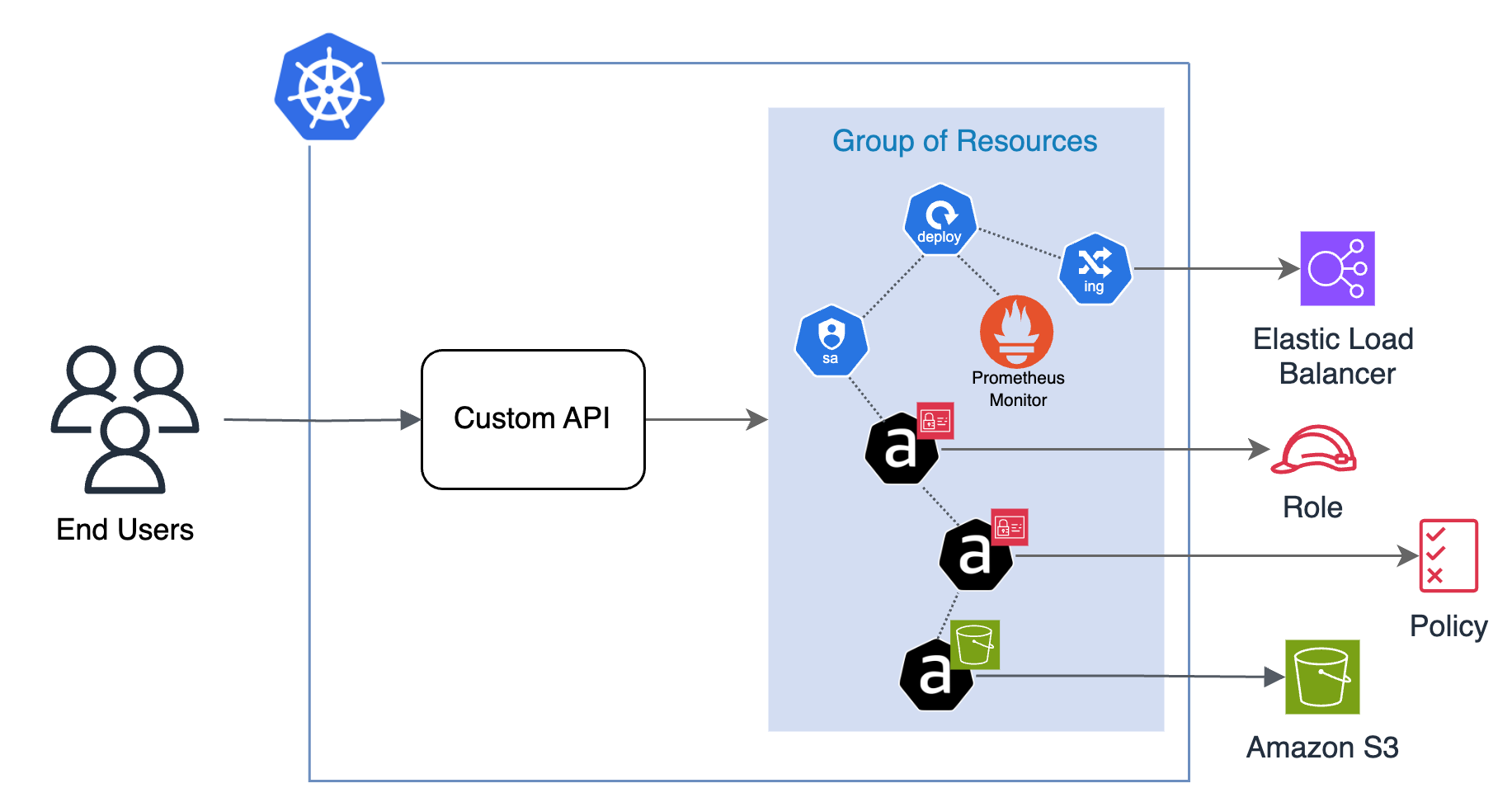

Kro is made up of several key components that work together to let you define, deploy, and manage groups of Kubernetes resources as a single, reusable unit. At the heart of kro is the ResourceGraphDefinition, a custom resource that acts as a blueprint for your grouped resources. This definition specifies which resources to create (like Deployments, Services, or even cloud resources), how they depend on each other, what configuration options users can set, and any default values or validation rules.

When we apply a ResourceGraphDefinition, kro generates a new CRD based on the blueprint. This CRD exposes a new API in your cluster, allowing users to create instances of your custom resource group just like any other Kubernetes object. Kro also deploys a dedicated controller for each generated CRD, which manages the entire lifecycle of the blueprint's resources: creating, updating, and deleting them.

Once the DevOps/SRE team has deployed the ResourceGraphDefinition, developer teams can use the new API to create their application stacks with their own custom values. Each custom resource they create from this API is known as an instance.

To use a simple Helm analogy: the ResourceGraphDefinition plays the role of the Helm chart (its templates), and an instance plays the role of the values.yaml a user writes for custom configuration.
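To make the dependency handling concrete, here is a minimal sketch of a ResourceGraphDefinition for a Deployment that consumes a ConfigMap, followed by an instance a developer might create. The names `app-with-config`, `AppWithConfig`, `appConfig`, `mode`, and `demo` are hypothetical. Because the Deployment's template refers to `${appConfig.metadata.name}`, kro can infer that the Deployment depends on the ConfigMap and create them in the right order, with no hand-maintained ordering. In Helm terms, the first document is the chart and the second is the values.

```yaml
# Hypothetical names throughout: app-with-config, AppWithConfig, appConfig, mode, demo
apiVersion: kro.run/v1alpha1
kind: ResourceGraphDefinition
metadata:
  name: app-with-config
spec:
  schema:
    apiVersion: v1alpha1
    kind: AppWithConfig
    spec:
      name: string | default=my-app
      mode: string | default=production
  resources:
    - id: appConfig
      template:
        apiVersion: v1
        kind: ConfigMap
        metadata:
          name: ${schema.spec.name}-config
        data:
          APP_MODE: ${schema.spec.mode}
    - id: deployment
      template:
        apiVersion: apps/v1
        kind: Deployment
        metadata:
          name: ${schema.spec.name}
        spec:
          replicas: 1
          selector:
            matchLabels:
              app: ${schema.spec.name}
          template:
            metadata:
              labels:
                app: ${schema.spec.name}
            spec:
              containers:
                - name: app
                  image: nginx
                  envFrom:
                    # Referencing the "appConfig" resource by id is what tells kro
                    # that the Deployment depends on the ConfigMap.
                    - configMapRef:
                        name: ${appConfig.metadata.name}
---
# An instance of the generated AppWithConfig API (the "values" side)
apiVersion: kro.run/v1alpha1
kind: AppWithConfig
metadata:
  name: demo
spec:
  name: demo
  mode: staging
```

In practice, apply the ResourceGraphDefinition first and wait for kro to register the AppWithConfig CRD before creating the instance; applying both documents in one go may fail because the new kind does not exist yet.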

Kro Architecture: Automating Kubernetes Lifecycle Management

Example: Building Golden Path Workflows with Kro

Defining a Custom ResourceGraph in Kro

```yaml
apiVersion: kro.run/v1alpha1
kind: ResourceGraphDefinition
metadata:
  name: my-application
spec:
  # The new API kro generates as a CRD
  schema:
    apiVersion: v1alpha1
    kind: Application
    # Fields users can set, with their types and defaults
    spec:
      name: string | default=my-awesome-app
      image: string | default=nginx
      ingress:
        enabled: boolean | default=false
    # Fields kro fills in from the underlying resources
    status:
      deploymentConditions: ${deployment.status.conditions}
      availableReplicas: ${deployment.status.availableReplicas}
  resources:
    - id: deployment
      template:
        apiVersion: apps/v1
        kind: Deployment
        metadata:
          name: ${schema.spec.name}
        spec:
          replicas: 3
          selector:
            matchLabels:
              app: ${schema.spec.name}
          template:
            metadata:
              labels:
                app: ${schema.spec.name}
            spec:
              containers:
                - name: ${schema.spec.name}
                  image: ${schema.spec.image}
                  ports:
                    - containerPort: 80
    - id: service
      template:
        apiVersion: v1
        kind: Service
        metadata:
          name: ${schema.spec.name}-service
        spec:
          # Reuses the Deployment's labels, so the Service depends on the Deployment
          selector: ${deployment.spec.selector.matchLabels}
          ports:
            - protocol: TCP
              port: 80
              targetPort: 80
    - id: ingress
      # Only created when the instance sets ingress.enabled: true
      includeWhen:
        - ${schema.spec.ingress.enabled}
      template:
        apiVersion: networking.k8s.io/v1
        kind: Ingress
        metadata:
          name: ${schema.spec.name}-ingress
        spec:
          rules:
            - http:
                paths:
                  - path: '/'
                    pathType: Prefix
                    backend:
                      service:
                        name: ${service.metadata.name}
                        port:
                          number: 80
```

Explaining Kro’s ResourceGraphDefinition Structure

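The ResourceGraphDefinition consists of two main sections:

- Schema: This section tells kro how to generate the CRD for our application. Like any Kubernetes resource, it has a spec and a status. The spec declares the fields users can set, along with their types and default values (for example, `image: string | default=nginx`). The status declares fields that kro fills in from the underlying resources, such as `${deployment.status.availableReplicas}`.

- Resources: This section lists the resources kro deploys for each instance of the CRD, each with an `id` and a `template`. Templates take their custom values from the schema's spec through expressions such as `${schema.spec.name}`, and they can reference each other by `id` (the Ingress uses `${service.metadata.name}`), which is how kro works out the dependencies between resources. An optional `includeWhen` condition, like the one on the Ingress, controls whether a resource is created at all.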
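As a sketch of what the schema can express beyond defaults, the fragment below turns the hardcoded `replicas: 3` into a validated field. It assumes kro's SimpleSchema `minimum` and `maximum` markers (documented for kro at the time of writing; check the version you run), and the bounds 1 to 10 are arbitrary example values. Since kro compiles the schema into the generated CRD's OpenAPI schema, an instance that asks for, say, 20 replicas would be rejected by the API server before kro ever reconciles it.

```yaml
# Fragment of the my-application ResourceGraphDefinition spec, not a complete manifest.
# Assumes SimpleSchema supports minimum/maximum; bounds 1..10 are arbitrary examples.
schema:
  apiVersion: v1alpha1
  kind: Application
  spec:
    name: string | default=my-awesome-app
    image: string | default=nginx
    # New field: integer with a default and bounds enforced by the generated CRD
    replicas: integer | default=3 minimum=1 maximum=10
    ingress:
      enabled: boolean | default=false
  # status section unchanged
resources:
  - id: deployment
    template:
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: ${schema.spec.name}
      spec:
        # Previously hardcoded to 3; now supplied by each instance
        replicas: ${schema.spec.replicas}
        # selector, Pod template, and the other resources stay as in the full example
```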

Multi-Team Deployments with Kro Instances

```yaml
apiVersion: kro.run/v1alpha1
kind: Application
metadata:
  name: team-a-app
  namespace: test
spec:
  name: team-a-app
  image: nginx:alpine
  ingress:
    enabled: true
---
apiVersion: kro.run/v1alpha1
kind: Application
metadata:
  name: team-b-app
  namespace: test
spec:
  name: team-b-app
  image: nginx
  ingress:
    enabled: false
```

How Instances Enable Self-Service Developer Platforms

The instance is a simple definition of our application, deployed with custom values. Note that its kind is Application, the kind we defined in the schema of the ResourceGraphDefinition. Each team creates its own instance from the same API, which is the multi-team use case of kro (one instance per team).
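Instances only need to set the fields that differ from the schema defaults. In the minimal sketch below, the hypothetical `team-c-app` omits `name` and `image`, so the defaults declared in the ResourceGraphDefinition (`my-awesome-app` and `nginx`) apply. This relies on the API server applying CRD defaults to fields inside the `spec` object, which is standard behavior for structural CRD schemas.

```yaml
# Hypothetical third team relying on the schema defaults
apiVersion: kro.run/v1alpha1
kind: Application
metadata:
  name: team-c-app
  namespace: test
spec:
  # name and image omitted: the defaults my-awesome-app and nginx apply
  ingress:
    enabled: false
```

Because `spec.name` drives the names of the underlying Deployment and Service, two instances that both fall back to the default name in the same namespace would collide, so in practice teams usually set `name` explicitly.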

What’s Next: From Kubernetes Infrastructure Automation to Multi-Cloud Kro

As kro matures, expect deeper integration with cloud provider resources and more advanced features for lifecycle management and observability. Platform teams can explore kro to build robust internal developer platforms that scale across multi-cloud environments. Imagine platform teams defining a single API that deploys an application along with its required cloud resources, for example AWS S3 buckets or GCP Cloud SQL instances. Organizations could also standardize and reuse deployment templates that work seamlessly across multiple cloud providers.
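Kro can template any resource whose CRD is installed in the cluster, so the cloud-resource idea can already be sketched with a cloud provider's Kubernetes controllers. The fragment below assumes AWS Controllers for Kubernetes (ACK) with the S3 controller installed; the `bucket` id is hypothetical. It would be added to `spec.resources` of a ResourceGraphDefinition such as `my-application`.

```yaml
# Fragment for spec.resources; assumes the ACK S3 controller is installed.
# The "bucket" id is hypothetical.
- id: bucket
  template:
    apiVersion: s3.services.k8s.aws/v1alpha1
    kind: Bucket
    metadata:
      name: ${schema.spec.name}-bucket
    spec:
      # Name of the S3 bucket in AWS; S3 bucket names must be globally unique
      name: ${schema.spec.name}-bucket
# Referencing the bucket from the Deployment's container, e.g.
#   env:
#     - name: BUCKET_NAME
#       value: ${bucket.spec.name}
# would make kro create the Bucket before the Deployment.
```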

Conclusion: DevOps vs Platform Engineering in the Age of Kro

Although Kubernetes makes container orchestration easier, it has drawbacks of its own, particularly when it comes to maintaining clean, scalable abstractions and managing complex, interdependent resources. Platform engineering addresses this complexity through its focus on developer self-service and golden paths. Kro (Kube Resource Orchestrator) is an excellent example of this strategy applied to real-world scenarios. By turning repeated application stacks into declarative, reusable APIs, kro helps curb CRD sprawl, eases dependency hell, and makes Kubernetes more developer-friendly. As the ecosystem grows, tools like kro will strongly influence the next wave of internal developer platforms: streamlined, scalable, and fast to build.