AI jobs often run for long periods on expensive hardware like GPUs. When a job fails halfway, you don't just lose progress—you waste valuable time and costly resources. Workflow orchestration solves this by providing fault tolerance, letting you break complex tasks into manageable steps, set dependencies, and recover from failures. This is especially critical in machine learning, where robust, efficient execution is paramount.

In this post, we’ll share how we migrated the core service for one of our client's AI red teaming platform from Celery to a modern, cloud-native architecture using Argo Workflows and NATS. For context, AI red teaming involves simulating attacks to find vulnerabilities in AI systems—a process that involves lengthy, resource-intensive jobs. Our journey not only made the job execution far more reliable but also slashed our compute costs by a factor of 11.

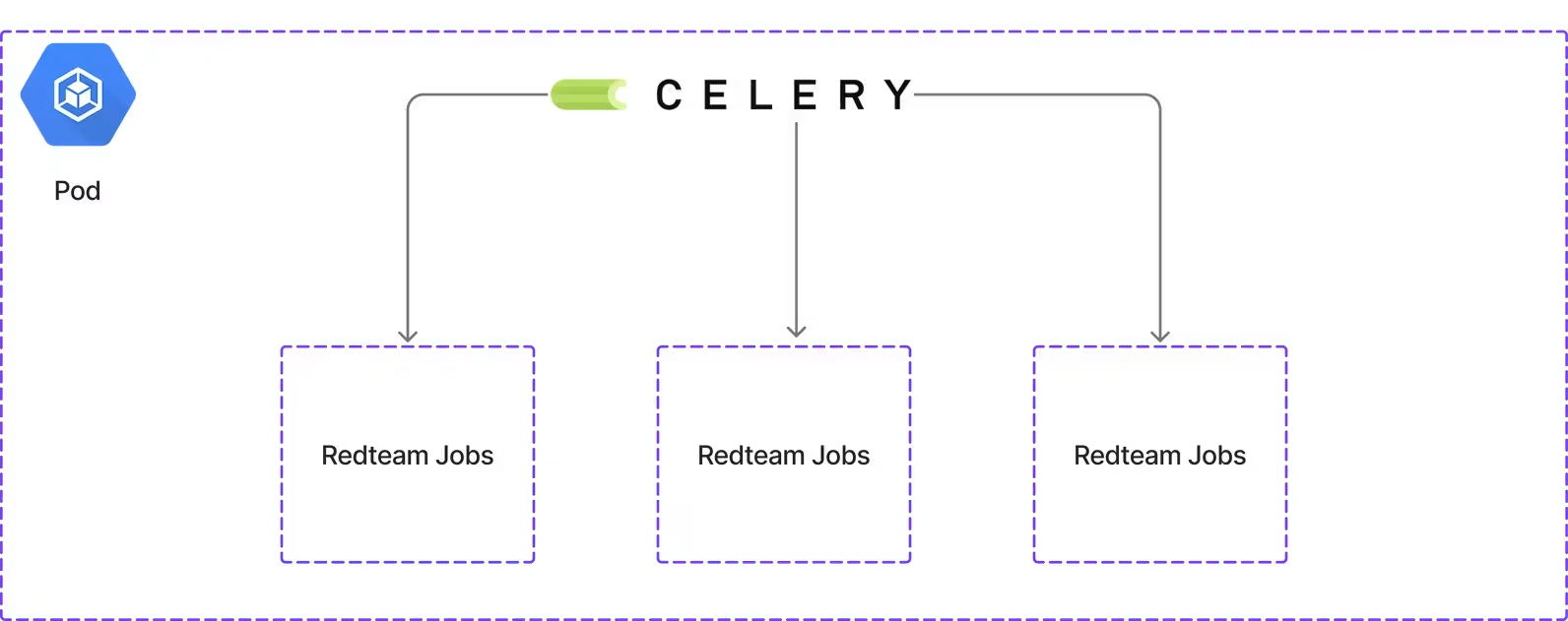

About Celery

Python Celery is an open-source distributed task queue. Developers use it to manage and execute background jobs or scheduled operations outside of a web request-response cycle. Instead of making users wait for time-consuming processes, Celery runs these tasks asynchronously, freeing up application resources and improving user experience.

In AI workloads, Celery can be valuable if designed properly. Machine learning models often require intensive computation, data preprocessing, or batch predictions. With Celery, you can delegate these jobs to background workers, allowing applications to remain responsive. However, for durable, long-running executions in cloud-native environments, other alternatives are often better suited.

Context: Why We Had to Migrate from Celery

Before diving deeper, let’s look at the old architecture. The red teaming service ran as a microservice on our Kubernetes cluster. Incoming requests came through an API gateway and were queued by a single Python service that combined Celery task processing with all other business logic. This monolithic design made it difficult to scale and consumed significant CPU and memory.

We observed the following problems with this setup:

- The Data Loss Nightmare: Whenever a pod failed—and failures were common—we lost all ongoing work. Even worse, our image updater would pull new container images, restart the pod, and erase hours of testing results. Telling the security team to rerun a six-hour vulnerability scan because “the pod restarted” was never easy.

- The Resource Black Hole: Every job, no matter how simple or complex, was allocated 11GB of memory. A basic port scan received the same hefty allocation as a demanding exploit chain. It was like using a freight truck to deliver a pizza. This also prevented us from using cheaper spot instances, forcing us to pay for on-demand instances.

- The Single Point of Failure: All tasks ran in one large pod. When it went down, everything stopped. We had no isolation and no graceful fallback—just a total system failure.

What are the Celery Alternatives?

In our search for a replacement, we considered the following alternatives:

Apache Airflow with KubernetesExecutor

Airflow is a workflow orchestration platform built for scheduling and monitoring data pipelines. With the KubernetesExecutor, each task runs in its own isolated Kubernetes pod.

- Pros: Strong UI for monitoring and visualizing workflows (DAGs), rich ecosystem of operators, excellent for data engineering, mature and widely adopted.

- Cons: Not designed for high-frequency or low-latency tasks, complex to set up, and primarily time-based rather than event-driven.

- Best for: Data pipelines, ETL jobs, and batch workflows that need robust scheduling and monitoring.

Temporal

Temporal is a workflow engine that ensures long-running business logic executes reliably. It uses a programming model where workflows are written as code and can survive process restarts.

- Pros: Highly resilient, developer-friendly (write workflows in regular code), supports event-driven and long-running processes (days or weeks).

- Cons: Adds operational overhead (requires a Temporal server), has a steeper learning curve, and is less suited for simple, fire-and-forget background jobs.

- Best for: Business process automation, order fulfillment, and complex, stateful operations.

Argo Workflows

Argo Workflows is a Kubernetes-native workflow engine where each workflow step runs as a pod.

- Pros: Deep Kubernetes integration, lightweight, scalable, and ideal for CI/CD or ML pipelines. Workflows are defined in YAML for easy version control.

- Cons: YAML definitions can become complex, requires containerizing all task logic, and has a more limited UI compared to Airflow.

- Best for: Kubernetes-native batch jobs, ML training pipelines, infrastructure automation, and CI/CD.

We were already using other projects from the Argo ecosystem, so Argo Workflows was a natural fit. A key requirement was to minimize changes to our existing codebase. To achieve this, we introduced NATS and a custom proxy service to bridge our existing application with the new workflow engine.

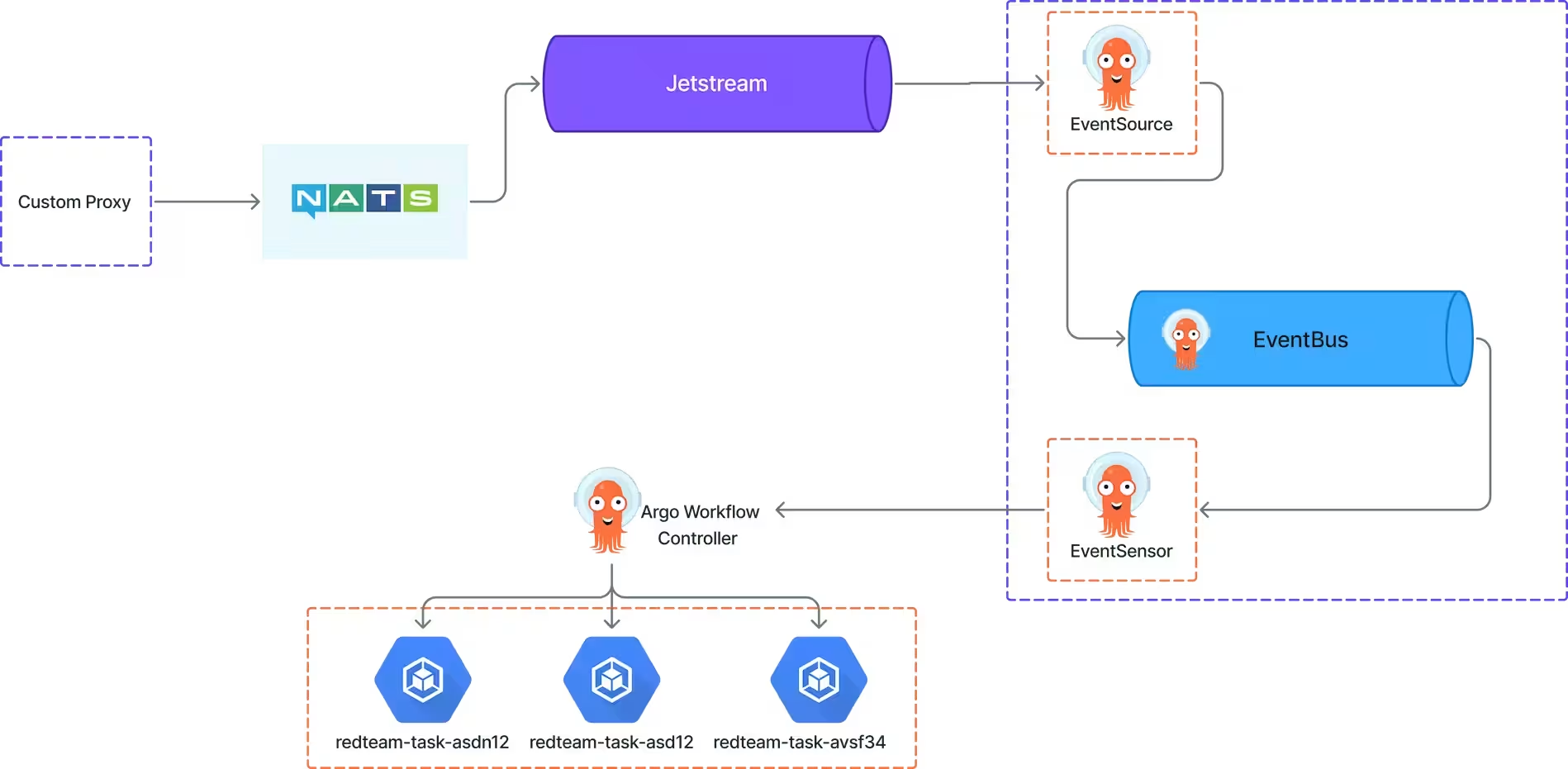

New Architecture with NATS and Argo Workflows

This new, event-driven architecture not only solved our reliability issues but also led to a dramatic 11x reduction in compute costs, allowing us to run eleven jobs for the price of one.

Here’s a detailed look at the new architecture.

The core components of this new system are a custom proxy, NATS JetStream for messaging, and Argo Events for triggering our workflows.

Custom Proxy and NATS Stream

We created a custom proxy that intercepts incoming job requests, enriches them with metadata from our database, and publishes them as events to a NATS JetStream. Using JetStream provides more control over message retention, duplication, and payload size.

We defined a Stream custom resource to manage all subjects related to our red teaming jobs.

apiVersion: jetstream.nats.io/v1beta2

kind: Stream

metadata:

name: workflows

namespace: argo-events

spec:

name: REDTEAM

subjects:

- 'redteamworkflows.*'

- 'attack.*'

- 'add-task.*'

- 'custom-task.*'

- 'generate-data.*'

- 'alignment-data.*'

storage: file

maxAge: 48h

maxMsgs: 10000

maxBytes: 5242880

duplicateWindow: 5m

retention: workqueue

description: 'Workflow events for creating redteam jobs'

yamlArgo Events Components

We used three key components from Argo Events to connect NATS to our Argo Workflows.

1. EventBus

The EventBus acts as a durable transport layer for events within the Argo Events system. We configured it to use NATS JetStream for an additional layer of reliability.

apiVersion: argoproj.io/v1alpha1

kind: EventBus

metadata:

name: redteam-eventbus

namespace: argo-events

spec:

jetstream:

version: 2.10.10

yaml2. EventSource

The EventSource is responsible for listening to external event producers—in our case, the NATS REDTEAM stream. It subscribes to specific subjects and forwards incoming messages to the EventBus.

(Note: The YAML for the EventSource is straightforward and subscribes to the subjects defined in our NATS stream. For brevity, we are omitting it here.)

3. Sensor

The Sensor listens for events on the EventBus and triggers actions. Our sensor listens for events from the EventSource and uses a template to submit a new Argo Workflow for each event. This is where the magic happens.

The payload from the NATS message is captured and passed as a parameter to the workflow.

apiVersion: argoproj.io/v1alpha1

kind: Sensor

metadata:

name: redteam-add-task-sensor

namespace: argo-events

spec:

dependencies:

- name: redteam-add-task

eventSourceName: redteam-add-task

eventName: redteam

eventBusName: redteam-eventbus

template:

serviceAccountName: argo-events-sa

triggers:

- template:

name: argo-workflow-trigger

argoWorkflow:

operation: submit

source:

resource:

apiVersion: argoproj.io/v1alpha1

kind: Workflow

metadata:

generateName: redteam-cloudraft-

namespace: redteam-jobs

spec:

entrypoint: process-order

arguments:

parameters:

- name: event-data

# The entire JSON body from the NATS message is passed here

value: '{{`{{.Input.body}}`}}'

templates:

- name: process-order

inputs:

parameters:

- name: event-data

container:

image: {{ .Values.sensor.jobImage }}:{{ .Values.sensor.jobversion}}

imagePullPolicy: Always

command:

- python

- demo-cloudraft-blog.py

env:

- name: WORKER_TYPE

value: "combined"

- name: NATS_URL

value: {{ .Values.sensor.natsURL }}

- name: IS_PROXY_MODE

value: "true"

- name: RT_NATS_PAYLOAD

# The event data is passed as an environment variable to the container

value: '{{`{{inputs.parameters.event-data}}`}}'

envFrom:

- secretRef:

name: redteam-proxy-env-secret

yamlThis Sensor configuration automatically creates a new job in the redteam-jobs namespace for each incoming NATS message. The resulting pod contains our application container along with an argoexec sidecar, which coordinates with the Argo controller to manage the workflow state.

Thanks to the Argo Workflows UI, we can now easily monitor, stop, and even rerun failed jobs. This replaces our old, error-prone process of manually re-triggering Celery tasks, which often led to out-of-memory (OOM) errors and instability.

Conclusion: A More Resilient and Cost-Effective Future

Migrating from Celery to a cloud-native architecture with Argo Workflows and NATS fundamentally transformed our red teaming platform. We moved from a fragile, resource-intensive system to a resilient, scalable, and cost-effective one.

The key benefits we unlocked include:

- Fault Tolerance: No more data loss from pod restarts. Argo Workflows ensures that our long-running jobs can survive failures and be retried.

- Resource Efficiency: By running each task in its own dedicated pod, we moved away from a monolithic memory allocation. This, combined with the ability to leverage spot instances, led to an 11x reduction in compute costs.

- Scalability & Isolation: Our system now scales dynamically based on real-time job demand, with each job isolated from the others, preventing a single failure from bringing down the entire system.

While Celery is a powerful tool for asynchronous tasks, our use case demanded the durability and cloud-native integration that a true workflow orchestrator provides. By embracing tools built for the Kubernetes ecosystem, we not only solved our immediate problems but also built a more robust foundation for the future.

Looking to improve your cloud native stack? Contact us to learn more about how we can help you achieve your goals.