Introduction

If you've ever found yourself knee-deep in a Kubernetes incident, watching a production microservice fail with mysterious 5xx errors, you know the drill: alerts are firing, dashboards are lit up like a Christmas tree, and your team is scrambling to make sense of a flood of metrics across every layer of the stack. It's not a question of if this happens—it's when.

In that high-pressure moment, the true challenge isn't just debugging—it's knowing where to look. For seasoned SREs and technical founders who live and breathe Kubernetes, the ability to quickly zero in on the right signals can make the difference between a five-minute fix and a five-hour outage.

So what are the metrics that actually move the needle? And how do you filter signal from noise when your platform is under fire?

This article breaks down the critical Kubernetes metrics that every high-performing team should keep an eye on—before the next incident catches you off guard.

If you don't have a monitoring system in place, you're already behind the curve. Kubernetes is a complex system with many moving parts, and without proper monitoring, you're flying blind. Engage us to help you set up the right observability stack.

Why Every Minute Counts in Kubernetes Outages

When Kubernetes systems break, the impact isn't just technical; it's also financial, contractual, and reputational.

Real Cost of Downtime

According to Gartner, the average cost of IT downtime is $5,600 per minute, which adds up to over $330,000 per hour. We do not want to imagine this happening during peak traffic, a product launch, or a high-stakes client demo. The longer you spend guessing which part of the system failed, the more your business takes the hit. Often, it's not even clear whether the issue lies in the network, storage, or application layer, leading to costly delays in diagnosis and resolution.

Tight SLAs & Tighter Repercussions

For the teams managing Kubernetes clusters on behalf of clients, Service Level Agreements (SLAs) can feel like a sword overhead. These agreements set strict limits on factors like downtime and error rates, where breaching them doesn't just mean a few angry emails. It can lead to financial penalties, escalations, or even losing the client altogether. Without knowing which metrics reflect health and which signal red flags, they are always one step away from trouble.

Mean Time to Recovery

The Mean Time to Recovery (MTTR) is a critical KPI for SRE and DevOps teams. It reflects how long it takes to detect, troubleshoot, and restore service after a failure. A low MTTR means your systems are resilient and your team is effective. But reducing MTTR is only possible if you're looking at the right data when the incident hits, and that's where the top Kubernetes metrics come in.

That is exactly what this blog is here for. We will walk you through the most critical Kubernetes metrics to monitor, the ones that give you real insight into the health of your system, help reduce downtime, and improve your response during incidents. Whether you're running a small dev cluster or a complex multi-tenant setup, this guide will help you prioritize the right signals.
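To make the numbers above concrete, here is a minimal Python sketch that computes MTTR and an estimated downtime cost. The incident timestamps are made up for illustration, and the cost per minute simply reuses the Gartner average quoted earlier; substitute your own figures.

```python
from datetime import datetime, timedelta

# Hypothetical incident log: (failure_started, service_restored) pairs.
incidents = [
    (datetime(2025, 5, 1, 10, 0), datetime(2025, 5, 1, 10, 25)),
    (datetime(2025, 5, 3, 14, 10), datetime(2025, 5, 3, 15, 40)),
    (datetime(2025, 5, 6, 16, 0), datetime(2025, 5, 6, 16, 5)),
]

COST_PER_MINUTE = 5_600  # USD, the Gartner average quoted in this article


def mean_time_to_recovery(log):
    """Average time from failure to restored service."""
    total = sum((end - start for start, end in log), timedelta())
    return total / len(log)


def downtime_cost(log, cost_per_minute=COST_PER_MINUTE):
    """Total downtime in minutes multiplied by the per-minute cost."""
    minutes = sum((end - start).total_seconds() / 60 for start, end in log)
    return minutes * cost_per_minute


print(mean_time_to_recovery(incidents))  # 0:40:00
print(downtime_cost(incidents))          # 672000.0
```

Three incidents totalling 120 minutes give an MTTR of 40 minutes; shaving even a few minutes off each one shows up directly in the cost line.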

Significance of The Four Golden Signals

If you have spent any time in the world of monitoring or Site Reliability Engineering, you have probably come across the Four Golden Signals: Latency, Traffic, Errors, and Saturation. Originally popularized by the Google SRE book, a comprehensive guide to site reliability, these signals remain the gold standard for what to measure to understand your system's health.

Even in Kubernetes environments, where complexity multiplies with microservices, dynamic scaling, and distributed components, the Four Golden Signals keep you aimed at the right target. They underpin every metric discussed in this article, so understanding them first makes the rest easier to apply.

- Latency helps you detect slowdowns before users start complaining about them. Metrics like API server latency or HTTP request durations show where bottlenecks are forming.

- Traffic metrics (like request rate, network throughput) help you understand demand and stress levels across your system.

- Errors such as failing pods, HTTP 5xx rates, and crash loops are your early warning signs.

- Saturation tells you when you're about to hit resource limits, whether it's CPU, memory, or disk I/O on nodes and pods.

In a distributed system like Kubernetes, problems rarely announce themselves clearly. Golden Signals offer a language to interpret cluttered data, spot anomalies, and prioritize what truly needs fixing. Knowing how your app performs against these four dimensions makes your metrics strategy more focused, your alerts more meaningful, and your team more responsive.

The RED & USE Methods

Just like the Four Golden Signals, two other powerful frameworks help teams make sense of their monitoring data: RED and USE. These methods offer a structured way to prioritize what to measure and where to look during troubleshooting. While Golden Signals give you a high-level overview of system health, RED and USE help you go deeper with intent, depending on whether you're debugging an application-level issue or digging into infrastructure problems.

RED Method For Applications & Services

The RED method focuses on user-facing services and microservices, and is all about how your application is performing from a user's perspective. It tracks three critical signals:

- Requests per second (traffic)

- Errors per second (failures)

- Duration of requests (latency)

Think of RED as your first defense against a bad user experience. It closely aligns with the Four Golden Signals and is commonly visualized using pre-built RED dashboards in tools like Prometheus and Grafana. For a deeper dive, check out the official blog on The RED Method by Tom Wilkie.
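As a rough illustration of the three RED signals, the sketch below derives them from a batch of request records collected over one time window. The `Request` type, the `red_summary` function, and the choice of a nearest-rank p95 for "duration" are assumptions made for this example; in practice these values usually come from Prometheus queries rather than hand-rolled code.

```python
from dataclasses import dataclass


@dataclass
class Request:
    status: int        # HTTP status code
    duration_s: float  # time taken to serve the request, in seconds


def red_summary(requests, window_s):
    """Return (requests/sec, errors/sec, p95 duration) for one window."""
    rate = len(requests) / window_s
    errors = sum(1 for r in requests if r.status >= 500) / window_s
    durations = sorted(r.duration_s for r in requests)
    # Nearest-rank p95: ceil(0.95 * n), computed with integer arithmetic.
    rank = max(1, -(-95 * len(durations) // 100))
    p95 = durations[rank - 1] if durations else 0.0
    return rate, errors, p95


# 18 fast successes and 2 slow failures observed over a 10-second window.
window = [Request(200, 0.1)] * 18 + [Request(500, 1.0), Request(500, 2.0)]
print(red_summary(window, window_s=10))  # (2.0, 0.2, 1.0)
```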

USE Method For Infrastructure & System Health

The USE method is geared toward lower-level system resources such as nodes, disks, and network interfaces. It tracks:

- Utilization – How much of a resource is being used?

- Saturation – Is the resource at or near capacity?

- Errors – Are there any failures in the resource?

This is especially useful when you're debugging performance bottlenecks or checking node health in Kubernetes. For example, using the USE method, you might quickly spot a disk I/O bottleneck or excessive memory pressure on a node.

RED and USE complement each other and help you design focused dashboards, meaningful alerts, and faster incident response workflows. For a deeper dive, check out the official blog on The USE Method by Brendan Gregg.

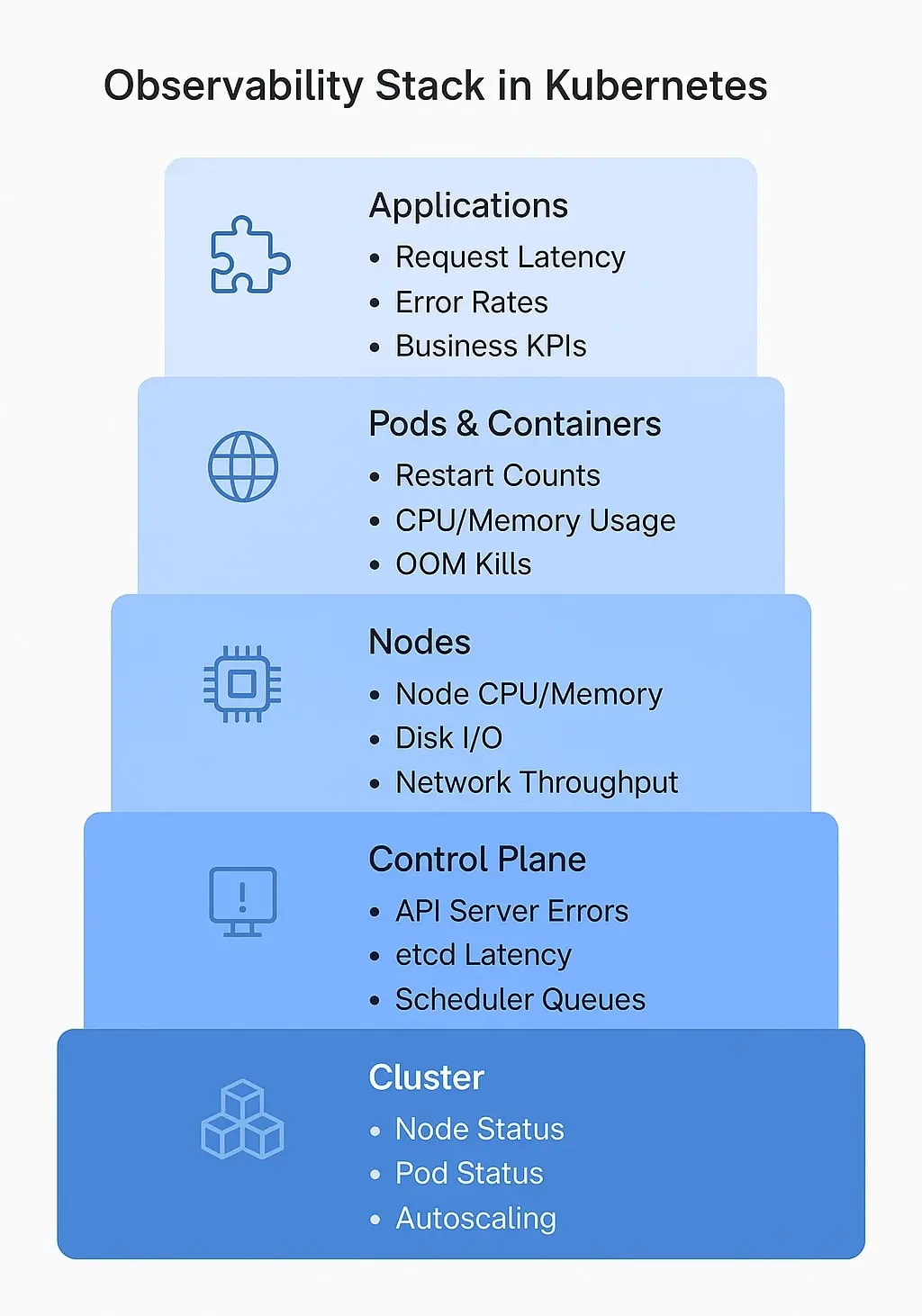

The Layers of Kubernetes Monitoring

Before we go deeper into metrics, it is important to understand where they come from. Kubernetes is a layered system, and each layer gives its own signals. If you want complete observability, you need to collect metrics from every layer.

Cluster Layer

This is the big-picture view. At this level, you track overall cluster health, how many nodes are active, how many are unschedulable, how many pods are in a crash loop, or if your autoscaler is working as expected. Metrics from the Kube Controller Manager, Cloud Controller, and Cluster Autoscaler belong here.

Control Plane

This is the brain of a cluster. Components like the API server, scheduler, and ETCD are responsible for making everything work. Metrics from this layer help you answer questions like "Is the scheduler under pressure?", "Is the ETCD server healthy and responding on time?", "Are API requests getting throttled?".

Nodes

These are the worker machines (virtual or physical) that run your workloads. Key node-level metrics include CPU, memory, disk I/O, and network throughput. If nodes are overloaded, your pods will suffer even if your app code is flawless.

Pods & Containers

This is the execution layer. Monitoring pod status, container restarts, resource requests/limits, and OOM (Out of Memory) kills can quickly tell you if your workloads are running as expected or if they're crashing silently in the background.

Applications

Finally, we reach the business logic that is the code you deploy. Application-level metrics include request latency, error rates, throughput, and custom business KPIs. These metrics help tie technical issues to user-facing problems, which is especially important when debugging customer-impacting incidents.

Kubernetes Metrics That Matter the Most

Once you understand the layers of observability in Kubernetes, the next step is knowing what to look at in each layer. Not all metrics are created equal; some help you react quickly, while others help you prevent issues entirely. Here are the top metrics across each layer that monitoring teams should track.

Cluster-Level Metrics

Let’s say your Kubernetes cluster is experiencing performance issues; maybe workloads are failing to schedule, pods are restarting, or users are complaining about latency. Instead of jumping into individual pod metrics, let’s start from the top. Here’s a practical flow to investigate issues at the cluster level and narrow down potential root causes.

Confirm the Symptoms at Scale

Start with basic observations. Are these problems isolated or affecting the entire cluster?

- Check the number of unschedulable pods:

kubectl get pods --all-namespaces --field-selector=status.phase=Pending

Output:

NAMESPACE NAME READY STATUS RESTARTS AGE

default myapp-frontend-7d8f9c6d8b-abcde 0/1 Pending 0 2m

kube-system coredns-558bd4d5db-xyz12 0/1 Pending 0 1m

The pods above are stuck in the Pending state, so investigate further to find the root cause.

- Look for frequent restarts:

kubectl get pods --all-namespaces | grep -E 'CrashLoopBackOff|Error'

Output:

NAMESPACE NAME READY STATUS RESTARTS AGE

default api-server-5d9f8f6d8b-xyz12 0/1 CrashLoopBackOff 5 10m

default db-service-7d8f9c6d8b-abcde 0/1 Error 3 8m

- Are nodes under pressure?

kubectl top nodes

Output:

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

node-1 1800m 75% 7000Mi 85%

node-2 1500m 80% 6500Mi 90%

Both nodes are running hot, with memory at 85% or higher. If multiple namespaces or workloads are impacted, it’s likely a cluster-level issue, not just an app problem.
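If you want to script this check, a small parser is enough. The Python sketch below reads the text printed by kubectl top nodes and flags hot nodes. The function name and the 70% CPU and 80% memory thresholds are assumptions chosen for the example, not Kubernetes defaults.

```python
def nodes_under_pressure(top_output, cpu_limit=70, mem_limit=80):
    """Return names of nodes whose CPU% or MEMORY% is at or above a limit."""
    flagged = []
    for line in top_output.strip().splitlines()[1:]:  # skip the header row
        name, _cpu, cpu_pct, _mem, mem_pct = line.split()
        # Nodes without metrics report "<unknown>" instead of a percentage.
        if not (cpu_pct.endswith("%") and mem_pct.endswith("%")):
            continue
        cpu = int(cpu_pct.rstrip("%"))
        mem = int(mem_pct.rstrip("%"))
        if cpu >= cpu_limit or mem >= mem_limit:
            flagged.append(name)
    return flagged


sample = """\
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
node-1   1800m        75%    7000Mi          85%
node-2   1500m        80%    6500Mi          90%
"""
print(nodes_under_pressure(sample))  # ['node-1', 'node-2']
```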

Assess Node Health and Availability

Node problems ripple across the entire cluster. Let’s check how many are healthy:

kubectl get nodes

Output:

NAME STATUS ROLES AGE VERSION

node-1 Ready worker 10d v1.25.0

node-2 NotReady worker 10d v1.25.0

Watch out for nodes in a NotReady or Unknown state. These can cause workload evictions, failed scheduling, and data plane failures.

If some nodes are out, look at recent cluster events:

kubectl get events --sort-by=.lastTimestamp

Output:

LAST SEEN TYPE REASON OBJECT MESSAGE

2m Warning NodeNotReady node/node-2 Node is not ready

1m Warning FailedScheduling pod/myapp-frontend-xyz12 0/2 nodes are available: 1 node(s) were not ready

Pay attention to messages like:

- NodeNotReady

- FailedScheduling

- ContainerGCFailed

Detect Resource Bottlenecks

Even if nodes are "Ready," they might not have capacity. Check CPU and memory pressure:

kubectl describe nodes | grep -A5 "Conditions:"

Output:

Conditions:

Type Status LastHeartbeatTime Reason

MemoryPressure True 2025-05-06T16:00:00Z KubeletHasInsufficientMemory

DiskPressure False 2025-05-06T16:00:00Z KubeletHasSufficientDisk

PIDPressure False 2025-05-06T16:00:00Z KubeletHasSufficientPID

Look for MemoryPressure, DiskPressure, or PIDPressure.

Check what resources the scheduler sees as allocatable:

kubectl describe node node-name | grep -A10 "Allocatable"

Output:

Allocatable:

cpu: 2000m

memory: 8192Mi

pods: 110

If everything looks maxed out, your cluster may be underprovisioned. It’s time to scale nodes or clean up unused resources.

Investigate Networking or DNS Issues

Latency complaints and failing pod readiness probes often come down to network problems.

Use a Prometheus dashboard to check for receive errors on container network interfaces:

rate(container_network_receive_errors_total[5m])

Check for CoreDNS issues:

kubectl logs -n kube-system -l k8s-app=kube-dns

Output:

.:53

2025/05/06 16:05:00 [INFO] CoreDNS-1.8.0

2025/05/06 16:05:00 [INFO] plugin/reload: Running configuration MD5 = 1a2b3c4d5e6f

2025/05/06 16:05:00 [ERROR] plugin/errors: 2 123.45.67.89:12345 - 0000 /etc/resolv.conf: dial tcp: lookup example.com on 10.96.0.10:53: server misbehaving

Spot dropped packets or erratic latencies in inter-pod communication.

Connect the Dots

Now correlate your findings. Ask:

- Are failing pods being scheduled on overloaded or failing nodes?

- Are pods restarting due to OOMKills or image pull issues?

- Do networking or DNS failures match the timing of user complaints?

By this point, a pattern should emerge, and you should be able to narrow the cause down to one of these reasons. Based on the example outputs above, this is most likely a cluster-level problem caused by an over-utilized or partially unavailable node.

Control Plane Metrics

Let’s say you've ruled out node failures and cluster resource issues, but your workloads are still acting strange. Pods remain in Pending for too long, deployments aren’t progressing, and even basic kubectl commands feel sluggish.

That’s your signal that the control plane might be the bottleneck. Here is how to troubleshoot Kubernetes control plane health using metrics, and trace the problem back to its source.

Gauge API Server Responsiveness

The API server is the front door to your cluster. If it's slow, everything slows down: kubectl, CI/CD pipelines, controllers, and autoscalers.

Check API server latency:

histogram_quantile(0.95, rate(apiserver_request_duration_seconds_bucket[5m]))

A spike here means users and internal components are all experiencing degraded interactions.
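It helps to know what histogram_quantile is doing with those buckets. The Python sketch below is a simplified re-implementation of the idea, not Prometheus source code: it finds the bucket that contains the requested rank and interpolates linearly inside it. The bucket bounds and counts are invented for illustration, and the function assumes 0 < q <= 1 and a first bucket that starts at zero.

```python
def histogram_quantile(q, buckets):
    """Estimate a quantile from cumulative histogram buckets.

    `buckets` is a list of (upper_bound, cumulative_count) pairs sorted by
    upper bound and ending with (float("inf"), total_count).
    """
    total = buckets[-1][1]
    if total == 0:
        return float("nan")
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if upper_bound == float("inf"):
                # The quantile lies in the open-ended bucket, so the best
                # available answer is the highest finite bound.
                return lower_bound
            # Assume observations are spread evenly inside the bucket.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count


# Hypothetical request-duration buckets (seconds, cumulative count).
api_buckets = [(0.1, 50), (0.5, 90), (1.0, 98), (float("inf"), 100)]
print(histogram_quantile(0.95, api_buckets))  # roughly 0.8125 seconds
```

The takeaway is that the result is an estimate bounded by your bucket layout: if most requests land in one wide bucket, the reported p95 can move a lot without the real latency changing much.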

Look for API Server Errors

Latency might be caused by underlying failures especially from ETCD, which backs all API state.

Check for 5xx errors from the API server:

rate(apiserver_request_total{code=~"5.."}[5m])

A sustained increase could mean:

- ETCD is overloaded or unhealthy

- API server is under too much load

- Network/storage latency is impacting ETCD reads/writes

If error rates correlate with latency spikes, check ETCD performance next.

Investigate Scheduler Delays

Maybe your pods are Pending and not getting scheduled even though nodes look healthy. This could be a scheduler problem, not a resource issue.

Check how long the scheduler is taking to place pods:

histogram_quantile(0.95, rate(scheduler_scheduling_attempt_duration_seconds_bucket[5m]))

High values here mean the scheduler is overwhelmed, blocked, or crashing.

Correlate this with pod age:

kubectl get pods --all-namespaces --sort-by=.status.startTime

Output:

NAMESPACE NAME READY STATUS RESTARTS AGE

default myapp-frontend-7d8f9c6d8b-abcde 0/1 Pending 0 18m

default api-server-5d9f8f6d8b-xyz12 0/1 CrashLoopBackOff 5 20m

default db-service-7d8f9c6d8b-def45 0/1 Error 3 19m

kube-system coredns-558bd4d5db-xyz12 0/1 Pending 0 21m

New pods have been Pending for over 15 minutes, which suggests the scheduler is delayed and the API server isn’t responding fast enough to resource or binding requests. If new pods sit in Pending too long, the scheduler is your bottleneck.

Monitor Controller Workqueues

The Controller Manager keeps the desired state in sync: scaling replicas, rolling updates, service endpoints, and so on. If it’s backed up, changes won’t propagate.

Look at the depth of workqueues:

sum(workqueue_depth{name=~".+"})

Most Kubernetes controllers are designed to quickly process items in their workqueues. A queue depth of 0–5 is generally normal and healthy. It means the controller is keeping up. Short spikes (up to ~10–20) can occur during events like rolling updates or scaling, and are usually harmless if they drop quickly. Start investigating if:

- workqueue_depth stays above 50–100 consistently

- workqueue_adds_total keeps rising rapidly

- workqueue_work_duration_seconds shows long processing times

These symptoms suggest the controller is backed up, leading to delays in:

- Rolling out deployments

- Updating service endpoints

- Reconciling desired vs. actual state
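The thresholds above can be turned into a simple triage rule. The following Python sketch classifies a window of workqueue_depth samples using the article's rough bands (0–5 as normal, 50 and above as sustained backlog). The function name, the category labels, and the exact cut-offs are assumptions for illustration; tune them to your own controllers.

```python
def classify_queue_depth(samples, normal_max=5, sustained_min=50):
    """Classify workqueue_depth samples for one controller, oldest first."""
    if not samples:
        return "no data"
    if min(samples) >= sustained_min:
        return "investigate"      # stayed high for the whole window
    if max(samples) <= normal_max:
        return "healthy"          # the controller is keeping up
    if samples[-1] <= normal_max:
        return "transient spike"  # rose, then drained again
    return "watch"                # elevated and not yet drained


print(classify_queue_depth([0, 2, 1, 3]))       # healthy
print(classify_queue_depth([1, 15, 18, 4]))     # transient spike
print(classify_queue_depth([60, 75, 90, 120]))  # investigate
```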

Also check:

sum(rate(workqueue_adds_total[5m]))

sum(rate(workqueue_work_duration_seconds_sum[5m])) / sum(rate(workqueue_work_duration_seconds_count[5m]))

Spikes here mean your controllers are overloaded, possibly due to a flood of changes or downstream API slowdowns.

Pull it All Together

From the example outputs above, we can conclude that ETCD and API server latency is causing cascading delays in the control plane:

- Scheduler can’t assign pods quickly due to slow API server.

- Controller Manager queues are backing up as desired state changes (like ReplicaSet creations) take too long to commit.

- kubectl and system components (like CoreDNS or autoscalers) are affected by poor responsiveness from the API server, which relies on ETCD.

In general, suspect the control plane when you see these together:

- High API latency

- Elevated 5xx errors

- Scheduler latency spikes

- Controller queues backed up

When control plane metrics go bad, symptoms ripple through the whole system. Tracking these metrics as a cohesive unit helps you catch early signals before workloads break.

Node-Level Metrics: Digging into the Machine Layer

If control plane metrics look healthy but problems persist, such as pods getting OOMKilled, apps slowing down, or workloads behaving inconsistently, it’s time to inspect the nodes themselves. These are the machines that run your actual workloads. Here’s how to walk through node-level metrics to find the culprit.

Identify Which Nodes Are Affected

Start by getting a quick snapshot of node health:

kubectl get nodes

Output:

NAME STATUS ROLES AGE VERSION

node-1 Ready worker 10d v1.25.0

node-2 NotReady worker 10d v1.25.0

Look for any nodes not in Ready state. If nodes are marked NotReady, Unknown, or SchedulingDisabled, that's your first signal. Then describe them:

kubectl describe node node-2

Output:

Conditions:

Type Status LastHeartbeatTime Reason

MemoryPressure False 2025-05-06T16:00:00Z KubeletHasSufficientMemory

DiskPressure True 2025-05-06T16:00:00Z KubeletHasDiskPressure

PIDPressure False 2025-05-06T16:00:00Z KubeletHasSufficientPID

Taints:

node.kubernetes.io/disk-pressure:NoSchedule

Disk pressure is explicitly reported, which is likely the source of the pod issues. Focus on:

- Conditions: Look for MemoryPressure, DiskPressure, or PIDPressure

- Taints: Check if workloads are being prevented from scheduling

Check Resource Saturation

If nodes are Ready but workloads are misbehaving, they might just be under pressure. Get real-time usage:

kubectl top nodes

Output:

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

node-1 1200m 60% 6000Mi 70%

node-2 800m 40% 5800Mi 68%

Based on the example output, CPU and memory are normal, so the disk is the likely bottleneck. In general, look for:

- High CPU%: Can lead to CPU contention and throttled workloads

- High Memory%: Can cause OOMKills or evictions

If a node is maxed out, list the pods running on it:

kubectl get pods --all-namespaces -o wide | grep node-2

Output:

default api-cache-678d456b7b-xyz11 0/1 Evicted 0 10m node-2

default order-db-7c9b5d49f-vx12c 0/1 Error 2 15m node-2

default analytics-app-67d945c78c-qwe78 0/1 CrashLoopBackOff 4 12m node-2

Identify noisy neighbors or pods consuming abnormal resources. Here, multiple failing pods and an eviction on the same node point to pod disruption driven by disk pressure.

Investigate Frequent Pod Restarts or Evictions

Pods restarting or getting evicted? Check the reason:

kubectl get pod pod-name -n namespace -o jsonpath="{.status.containerStatuses[*].lastState}"

Output:

{"terminated":{"reason":"Evicted","message":"The node was low on disk."}}

Common reasons:

- OOMKilled: memory overuse

- Evicted: node pressure (memory, disk, or PID)

- CrashLoopBackOff: instability in app or runtime

Then verify which node they were running on. Repeated issues from the same node point to a node-level problem.
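When you are checking many pods, it can help to map each reason to its usual meaning automatically. The Python sketch below parses the JSON printed by the jsonpath query above for a single container and looks the reason up in the list of common reasons. The `HINTS` table and the `explain_last_state` helper are hypothetical names introduced for this example.

```python
import json

# Reasons and explanations taken from the list of common reasons above.
HINTS = {
    "OOMKilled": "memory overuse",
    "Evicted": "node pressure (memory, disk, or PID)",
    "CrashLoopBackOff": "instability in app or runtime",
}


def explain_last_state(last_state_json):
    """Return (reason, hint) for one container's lastState JSON object."""
    state = json.loads(last_state_json)
    for phase in ("terminated", "waiting"):
        reason = state.get(phase, {}).get("reason")
        if reason:
            return reason, HINTS.get(reason, "not a common reason; check pod events")
    return None, "no previous failure recorded"


sample_state = '{"terminated":{"reason":"Evicted","message":"The node was low on disk."}}'
print(explain_last_state(sample_state))
# ('Evicted', 'node pressure (memory, disk, or PID)')
```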

Check Disk and Network Health

Some failures are subtler: slow apps, stuck I/O, DNS errors. These often come from disk or network bottlenecks.

Use Prometheus Dashboard:

Disk I/O:

rate(node_disk_reads_completed_total[5m])

Network errors:

rate(node_network_receive_errs_total[5m])

rate(node_network_transmit_errs_total[5m])

These can indicate bad NICs, over-saturated interfaces, or DNS resolution failures affecting pods on that node. If you need more detail than the metrics give you, SSH into the node and use:

iostat -xz 1 3

Example Output:

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await svctm %util

nvme0n1 0.00 12.00 50.00 250.00 1024.00 8192.00 60.00 8.50 30.00 1.00 99.90

And check:

dmesg | grep -i error

Example Output:

[ 10452.661212] blk_update_request: I/O error, dev nvme0n1, sector 768

[ 10452.661217] EXT4-fs error (device nvme0n1): ext4_find_entry:1463: inode #131072: comm kubelet: reading directory lblock 0

Look for high I/O wait, dropped packets, or NIC errors.

Review Node Stability & Uptime

Sometimes the issue is churn: nodes going up and down too frequently due to reboots or cloud spot termination.

Check uptime:

uptime

Output:

16:15:03 up 2 days, 2:44, 1 user, load average: 5.12, 4.98, 3.80

Or with Prometheus:

node_time_seconds - node_boot_time_seconds

Frequent reboots suggest infrastructure problems or autoscaler misbehavior. If it’s spot nodes, review instance interruption rates.

Correlate and Isolate

In this example, node-2 is experiencing disk I/O congestion, which is confirmed by the DiskPressure condition, pod evictions due to low disk, iostat metrics showing 99%+ utilization and 30ms I/O latency, and kernel logs showing read errors. This node is the root cause of the pod disruptions and degraded application behavior. Other combinations of signals can point to a node as well. Let’s say you find:

- One node has 90%+ memory usage

- That node also shows disk IO spikes and network errors

- Most failing pods are running on that node

When these line up, the node itself is the most likely culprit.

Node-level issues are often the hidden root of noisy, hard-to-trace application problems. Always include node health in your diagnostic workflow; even when app logs seem to tell a different story.

Pod & Deployment-Level Issues (RED Metrics)

If node-level metrics look healthy but problems persist (some pods are slow, users are getting errors, and latency seems off), it is time to check what is wrong at the pod or deployment level. Here’s how to tackle it.

Spot the Symptoms

Start by identifying which services or deployments are affected. Are users reporting:

- Slow API responses?

- Errors in requests?

- Timeouts?

Correlate with actual service/pod behavior using:

kubectl get pods -A

Output:

NAMESPACE NAME READY STATUS RESTARTS AGE

default auth-api-7f8b45dd8f-abc12 0/1 CrashLoopBackOff 5 10m

default auth-api-7f8b45dd8f-xyz89 0/1 CrashLoopBackOff 5 10m

default payment-api-6f9c7f9b44-123qw 1/1 Running 0 20m

Look for pods in CrashLoopBackOff, Pending, or Error states. In this example, the auth-api pods are failing, which means something is wrong with that deployment.

Check the Request Rate

This tells you if the service is even receiving traffic, and whether it suddenly dropped.

If you're using Prometheus + instrumentation (e.g., HTTP handlers exporting metrics):

rate(http_requests_total[5m])

Look for a sharp drop in traffic. It might mean the pod isn’t even reachable due to readiness/liveness issues or a misconfigured ingress.

Also check the load balancer/ingress controller logs (e.g., NGINX, Istio) for clues.

Check the Error Rate

This reveals if pods are throwing 5xx or 4xx errors, a sign of broken internal logic or downstream service failures.

rate(http_requests_total{status=~"5.."}[5m])

Also inspect the pods:

kubectl logs pod-name

Output:

Error: Missing required environment variable DATABASE_URL

at config.js:12:15

at bootstrapApp (/app/index.js:34:5)

...

Look for exceptions, failed database calls, or panics. Here we see the pod is crashing due to a missing DATABASE_URL, which points to a config issue introduced during deployment.

Use kubectl describe pod for events like:

- Failing readiness/liveness probes

- Container crashes

- Volume mount errors

Example Output:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Unhealthy 2m (x5 over 10m) kubelet Readiness probe failed: HTTP probe failed with statuscode: 500

Warning BackOff 2m (x10 over 10m) kubelet Back-off restarting failed container

Check the Request Duration (Latency)

High latency with no errors means something is slow, not broken.

histogram_quantile(0.95, rate(http_request_duration_seconds_bucket[5m]))

If request durations spike:

- Check if dependent services (e.g., database, Redis) are under pressure

- Use tracing tools (e.g., Jaeger, OpenTelemetry) if set up

Look at pod resource usage with:

kubectl top pod

Output:

NAME CPU(cores) MEMORY(bytes)

auth-api-7f8b45dd8f-abc12 15m 128Mi

payment-api-6f9c7f9b44-123qw 80m 200Mi

In the scenario we considered, there are no resource throttling or usage issues; the crash is logic-related, not pressure-related. To check CPU throttling directly in Prometheus:

rate(container_cpu_cfs_throttled_seconds_total[5m])

Correlate with Deployment Events

Sometimes your pods are healthy but something changed in the deployment process (bad rollout, config error).

Check rollout history:

kubectl rollout history deployment deployment-name

Example Output:

deployment.apps/auth-api

REVISION CHANGE-CAUSE

1 Initial deployment

2 Misconfigured env var for DATABASE_URL

See if a new revision broke things. If yes:

kubectl rollout undo deployment auth-api

Output:

deployment.apps/auth-api rolled back

Also review the deployment description for more information:

kubectl describe deployment auth-api

Output:

Name: auth-api

Namespace: default

Replicas: 2 desired | 0 updated | 0 available | 2 unavailable

StrategyType: RollingUpdate

Conditions:

Type Status Reason

---- ------ ------

Progressing False ProgressDeadlineExceeded

Available False MinimumReplicasUnavailable

Environment:

DATABASE_URL: <unset>

Ask:

- Were all replicas successfully scheduled?

- Did resource limits or readiness probes cause issues?

Spot Trends in Replica Behavior

If you suspect scaling problems (e.g., not enough replicas to handle load):

sum(kube_deployment_spec_replicas) by (deployment)

sum(kube_deployment_status_replicas_available) by (deployment)

A mismatch between these two values means rollout issues, pod crashes, or scheduling failures.
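The comparison itself is simple enough to sketch in a few lines of Python. Here `desired` and `available` stand in for the results of the two queries above, keyed by deployment name; the dictionaries and the `replica_gaps` helper are illustrative assumptions, with values borrowed from the auth-api example.

```python
def replica_gaps(desired, available):
    """Return {deployment: missing_replicas} for deployments short of replicas."""
    return {
        name: want - available.get(name, 0)
        for name, want in desired.items()
        if available.get(name, 0) < want
    }


desired = {"auth-api": 2, "payment-api": 1}
available = {"payment-api": 1}  # auth-api has no available replicas
print(replica_gaps(desired, available))  # {'auth-api': 2}
```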

Final Diagnosis

By following this flow, you’ll isolate whether your pods are:

- Unavailable (readiness or probe issues)

- Throwing errors (broken logic, bad config)

- Slow (upstream delays, resource throttling)

- Or unstable (bad rollout, crashing containers)

Troubleshooting Application-Level Issues

If pods are running fine, nodes are healthy, and there are no deployment issues, but users are still complaining, then something could be wrong in the app itself. At this stage the cluster looks fine, and the likely cause is internal app logic, a dependency, or a performance issue. Here’s how to troubleshoot it.

Trace the Symptoms from the Top

What are users actually experiencing?

- Is a specific endpoint slow?

- Is authentication failing?

- Are pages timing out intermittently?

Start by querying RED metrics from your app’s own observability (assuming it's instrumented with Prometheus, OpenTelemetry, etc.):

- Request rate per endpoint:

rate(http_requests_total{job="your-app"}[5m])

- Error rate (e.g., 4xx/5xx):

rate(http_requests_total{status=~"5.."}[5m])

- Latency distribution:

histogram_quantile(0.95, rate(http_request_duration_seconds_bucket{job="your-app"}[5m]))

This will quickly show which part of your app is misbehaving.

Use Traces to Follow the Journey

If metrics are the "what", traces are the "why."

Use tracing (Jaeger, Tempo, or OpenTelemetry backends) to:

- Trace slow or failed requests

- Identify downstream service delays (e.g., DB, external APIs)

- Measure time spent in each span

Look for patterns like:

- Long DB query spans

- Retries or timeouts from third-party APIs

- Deadlocks or slow code paths

Profile Resource-Intensive Paths

Sometimes, the issue is an internal performance bug like memory leaks, CPU spikes, or thread contention.

Use profiling tools like:

- Pyroscope, Parca, Go pprof, or Node.js Inspector

- Flame graphs to visualize CPU/memory hotspots

Check Dependencies & DB Metrics

Your app might be healthy, but its dependencies might not be.

- Is the database under pressure?

mysql_global_status_threads_running

- Are Redis queries timing out?

rate(redis_commands_duration_seconds_bucket[5m])

- Are queue workers backed up?

sum(rabbitmq_queue_messages_ready)

Also watch for:

- Connection pool exhaustion

- Slow queries

- Locks or deadlocks

Even subtle latency in DB or cache can bubble up as app slowdowns.

External Services or 3rd Party APIs

Check whether your app relies on:

- Payment gateways

- Auth providers (like OAuth)

- External APIs (e.g., geolocation, email, analytics)

Use Prometheus metrics or custom app logs to track:

- Latency of external calls

- Error rates (timeouts, HTTP 503s)

- Retry storms

Add circuit breakers or timeouts to avoid cascading failures.
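For readers who have not built one before, here is a minimal circuit breaker sketch in Python. It is a teaching example under stated assumptions, not a production library: the class name, the `max_failures` and `reset_after_s` parameters, and the choice of RuntimeError for rejected calls are all made up for illustration, and it is not thread-safe. Timeouts are not shown here; they belong in the wrapped call itself.

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency until a cool-down has passed."""

    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock      # injectable so the breaker can be tested
        self.failures = 0
        self.opened_at = None   # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise RuntimeError("circuit open: skipping call")
            self.opened_at = None  # cool-down elapsed: allow one trial call
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # any success resets the failure count
        return result
```

Wrapping an outbound call then looks like `breaker.call(fetch_quote, order_id)`, where `fetch_quote` is a hypothetical function that talks to the external API. Once the breaker trips, callers fail fast instead of piling up behind a dependency that is already struggling.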

Validate Configuration & Feature Flags

Sometimes the issue is human:

- Was a feature flag turned on for everyone?

- Did a bad config rollout silently break behavior?

- Was a critical env var left empty?

Review:

kubectl describe deployment your-app

Example Output:

Name: your-app

Namespace: default

CreationTimestamp: Mon, 06 May 2025 14:21:52 +0000

Labels: app=your-app

Selector: app=your-app

Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable

StrategyType: RollingUpdate

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

Pod Template:

Containers:

your-app:

Image: ghcr.io/your-org/your-app:2025.05.06

Port: 8080/TCP

Environment:

FEATURE_BACKGROUND_REINDEXING: "true"

DATABASE_URL: "postgres://db.svc.cluster.local"

Mounts:

/etc/config from config-volume (ro)

/etc/secrets from secret-volume (ro)

Volumes:

config-volume:

ConfigMapName: app-config

secret-volume:

SecretName: db-secret

Check env vars, ConfigMaps, and secret mounts. Also audit Git or your config source of truth. In the example output above, all pods are healthy and the rollout was successful, but the environment variable FEATURE_BACKGROUND_REINDEXING is enabled. It is likely triggering background operations that were not meant for production, causing performance regressions.

Final Diagnosis

If you’ve ruled out infrastructure and Kubernetes mechanics, your issue is almost certainly in:

- Business logic

- Misbehaving external systems

- Unoptimized code paths

- Bad configs or feature toggles

With solid RED metrics, tracing, profiling, and dependency checks, you’ll isolate the slowest or weakest part of the app lifecycle.

Common Challenges in Monitoring Kubernetes

Monitoring a Kubernetes environment isn't just about scraping some metrics and throwing them into dashboards. In real-world scenarios, especially in large-scale, multi-team clusters, there are unique challenges that can cripple even the best monitoring setups. Here are some of the most common ones that teams face:

Metric Overload

With so many layers (clusters, nodes, control planes, pods, and apps), it's easy to end up with thousands of metrics. But more metrics do not equal better observability. Without a good signal-to-noise ratio, teams get stuck chasing anomalies that don't matter while missing critical signals that do.

Inconsistent Metric Sources

Kubernetes components expose telemetry in different formats and through different tools (Prometheus for metrics, the ELK/EFK stack for logs, and so on). This fragmentation can lead to incomplete or duplicated data, and sometimes even conflicting insights, making root cause analysis harder.

Multi-Tenancy Complexity

In shared clusters, multiple teams deploy and monitor their own apps. Without clear namespacing, labeling, and role-based access, it becomes hard to isolate responsibility or debug performance issues without stepping on each other's toes.

Scaling Problems

At smaller scales, you might get by with basic dashboards. But as your workloads grow, so do the cardinality of metrics, storage costs, and processing load on your observability stack. Without a scalable monitoring setup, you risk cluttered dashboards and missed alerts.
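Cardinality is worth a quick back-of-the-envelope calculation, because it grows multiplicatively rather than additively. The sketch below computes an upper bound on the number of time series for a single metric as the product of the distinct values each label can take. The label names and counts are invented for illustration, and real series counts are usually lower because not every combination occurs.

```python
from math import prod


def max_series(label_values: dict[str, int]) -> int:
    """Upper bound on time series for one metric: the product of the
    number of distinct values each label can take."""
    return prod(label_values.values())


# Hypothetical label counts for a single HTTP request counter.
baseline = {"namespace": 10, "pod": 50, "method": 5, "status": 8}
# Same metric after someone adds a per-user label.
with_user_id = {**baseline, "user_id": 10_000}

if __name__ == "__main__":
    print(max_series(baseline))      # 20000
    print(max_series(with_user_id))  # 200000000
```

A single unbounded label such as a user ID turns a manageable metric into one that can overwhelm storage and query performance, which is why high-cardinality values generally belong in logs or traces rather than in metric labels.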

Monitoring the Monitoring System

Ironically, one of the most overlooked gaps is keeping tabs on your observability stack itself. What happens if Prometheus crashes? Or if Alertmanager silently dies? Monitoring the monitor ensures you're not blind when it matters most.

Break-Glass Mechanisms

Sometimes, no matter how well things are set up, you need to bypass the dashboards and go straight to logs, live debugging, or kubectl inspections. Having a documented "break-glass" process with emergency steps to dig deeper can save time during production outages.

How to Overcome These Challenges With Best Practices

While Kubernetes observability can feel overwhelming, a thoughtful strategy and the right tools can make all the difference.

Focus on High-Value Metrics

Instead of tracking everything, prioritize Golden Signals, RED/USE metrics, and metrics tied to SLAs and SLOs. Create dashboards with intent, not clutter.
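Tying metrics to SLOs usually comes down to simple error-budget arithmetic. The sketch below shows that calculation in Python; the function names, the 30-day window, and the 99.9% target are assumptions picked for the example, not values taken from any particular SLA.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Allowed downtime, in minutes, for an availability SLO over a window."""
    total_minutes = window_days * 24 * 60
    return (1.0 - slo) * total_minutes


def budget_remaining(slo: float, bad_minutes: float, window_days: int = 30) -> float:
    """Fraction of the error budget still unspent.

    Goes negative once more downtime has accrued than the SLO allows.
    """
    budget = error_budget_minutes(slo, window_days)
    return (budget - bad_minutes) / budget


if __name__ == "__main__":
    # A 99.9% target over 30 days leaves roughly 43.2 minutes of downtime.
    print(round(error_budget_minutes(0.999), 1))    # 43.2
    # After a 21.6-minute outage, about half the budget is gone.
    print(round(budget_remaining(0.999, 21.6), 2))  # 0.5
```

A dashboard panel showing remaining budget gives a team one number to watch, and it makes the trade-off between shipping changes and protecting reliability explicit.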

Standardize Your Metric Sources

Use a centralized metrics pipeline, typically Prometheus, with exporters like kube-state-metrics, node-exporter, and custom app exporters. Stick to consistent naming conventions and labels to avoid confusion across teams.
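Naming conventions are easier to keep when they are checked automatically. The following Python sketch lints metric names against the traditional Prometheus metric-name pattern plus two house rules. The house rules (lowercase snake_case, counters ending in `_total`) mirror common Prometheus practice but are assumptions here, and `lint_metric` is a hypothetical helper rather than part of any library.

```python
import re

# Traditional Prometheus metric-name pattern.
VALID_NAME = re.compile(r"^[a-zA-Z_:][a-zA-Z0-9_:]*$")
# Hypothetical house rule: lowercase snake_case only.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9_]*$")


def lint_metric(name: str, kind: str) -> list[str]:
    """Return a list of naming problems for one metric (empty if clean)."""
    problems = []
    if not VALID_NAME.match(name):
        problems.append("not a valid Prometheus metric name")
    elif not SNAKE_CASE.match(name):
        problems.append("not lowercase snake_case")
    if kind == "counter" and not name.endswith("_total"):
        problems.append("counter should end in _total")
    return problems


if __name__ == "__main__":
    for name, kind in [
        ("http_requests_total", "counter"),
        ("httpRequests", "counter"),
        ("http-requests", "gauge"),
    ]:
        print(name, lint_metric(name, kind))
```

A check like this could run in CI against the metrics each service registers, so inconsistencies are caught before they reach shared dashboards.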

Use Labels & Namespaces Effectively

Organize metrics by namespace, team, or application, and apply proper labels to distinguish tenants. Use tools like Prometheus' relabeling and Grafana's variable filters to slice metrics cleanly per use case.

Design for Scale

Enable metric retention policies, recording rules, and downsampling. Consider remote write to long-term storage (like Thanos or Grafana Mimir) for large environments. Test how your dashboards perform under load.
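To make downsampling concrete, here is a minimal Python sketch that averages raw samples into fixed-width time buckets. It is an illustration of the idea only: the function name and sample data are made up, and production systems typically keep more than the mean per bucket (for example min, max, and count) so that spikes are not averaged away.

```python
from collections import defaultdict


def downsample(samples: list[tuple[int, float]], bucket_s: int) -> list[tuple[int, float]]:
    """Average (timestamp, value) samples into fixed-width time buckets.

    Each output point is stamped with the start of its bucket.
    """
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[ts - ts % bucket_s].append(value)
    return [(start, sum(vals) / len(vals)) for start, vals in sorted(buckets.items())]


if __name__ == "__main__":
    # Six raw samples scraped every 15 seconds.
    raw = [(0, 1.0), (15, 3.0), (30, 5.0), (45, 7.0), (60, 10.0), (75, 20.0)]
    print(downsample(raw, bucket_s=60))
    # [(0, 4.0), (60, 15.0)]
```

Six points collapse to two, which is the trade-off in miniature: older data becomes cheaper to store and faster to query, at the cost of resolution.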

Monitor Your Monitoring

Set up alerts for your observability stack (e.g., “Is Prometheus scraping?”, “Is Alertmanager up?”). Include basic health checks for Grafana, Prometheus, exporters, and data sources.
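One common way to do this is a heartbeat, sometimes called a dead man's switch: the monitoring stack emits a signal on a fixed schedule, and an independent checker raises the alarm when that signal stops arriving. The Python sketch below shows only the staleness check. The function name and the five-minute threshold are assumptions, and the checker has to run outside the cluster it is watching to be of any use.

```python
from datetime import datetime, timedelta, timezone


def heartbeat_is_stale(last_seen: datetime, now: datetime,
                       max_age: timedelta = timedelta(minutes=5)) -> bool:
    """True when the monitoring stack has been silent for longer than max_age."""
    return now - last_seen > max_age


if __name__ == "__main__":
    now = datetime(2025, 5, 6, 12, 0, tzinfo=timezone.utc)
    recent = now - timedelta(minutes=2)
    old = now - timedelta(minutes=12)
    print(heartbeat_is_stale(recent, now))  # False
    print(heartbeat_is_stale(old, now))     # True
```

The point of the pattern is that silence becomes a signal: if Prometheus or Alertmanager goes down, the missing heartbeat is what pages you.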

Establish "Break-Glass" Documents

Have documented steps for when observability fails, like which logs to tail, which kubectl commands to run, or how to access emergency dashboards. Practice chaos drills so everyone knows what to do.

Tools That Help You Monitor These Metrics

Understanding what to monitor is only half the task; the other half is how you actually collect, store, and visualize that data in a scalable and insightful way. The Kubernetes ecosystem has a rich set of observability tools that make this easier.

Prometheus and Grafana

- Prometheus is the de facto standard for scraping, storing, and querying time-series metrics in Kubernetes.

- Grafana lets you visualize those metrics and set up alerting.

- With exporters like `node-exporter` and `kube-state-metrics`, you can cover everything from node health to pod status and custom application metrics.

Best for teams looking for full control, custom dashboards, and open-source extensibility.

kube-state-metrics

This is a service that listens to the Kubernetes API server and generates metrics about the state of Kubernetes objects such as deployments, nodes, and pods. It complements Prometheus by exposing high-level cluster state metrics (e.g., number of ready pods, desired replicas, node conditions).

Best for cluster-level insights and higher-order metrics.
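To show the kind of question these state metrics answer, the Python sketch below compares desired and available replicas per deployment using two kube-state-metrics series, `kube_deployment_spec_replicas` and `kube_deployment_status_replicas_available`. The sample text, the `checkout` deployment, and the `degraded_deployments` helper are invented for illustration, and the regex parser is deliberately simplistic (it ignores escaped quotes, comments, and timestamps). In practice you would ask Prometheus this question with a query rather than parse the exposition text yourself.

```python
import re

# Hand-written sample in Prometheus text exposition format.
SAMPLE = """
kube_deployment_spec_replicas{namespace="prod",deployment="your-app"} 3
kube_deployment_status_replicas_available{namespace="prod",deployment="your-app"} 3
kube_deployment_spec_replicas{namespace="prod",deployment="checkout"} 4
kube_deployment_status_replicas_available{namespace="prod",deployment="checkout"} 2
"""

LINE = re.compile(r"^(\w+)\{(.*)\}\s+(\S+)$")
LABEL = re.compile(r'(\w+)="([^"]*)"')


def degraded_deployments(text: str) -> dict:
    """Map (namespace, deployment) -> (available, desired) where available < desired."""
    desired, available = {}, {}
    for line in text.strip().splitlines():
        match = LINE.match(line.strip())
        if not match:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(LABEL.findall(raw_labels))
        key = (labels.get("namespace"), labels.get("deployment"))
        if name == "kube_deployment_spec_replicas":
            desired[key] = float(value)
        elif name == "kube_deployment_status_replicas_available":
            available[key] = float(value)
    return {
        key: (available.get(key, 0.0), want)
        for key, want in desired.items()
        if available.get(key, 0.0) < want
    }


if __name__ == "__main__":
    print(degraded_deployments(SAMPLE))
    # {('prod', 'checkout'): (2.0, 4.0)}
```

The same comparison, desired versus available, is the basis for most deployment health alerts built on kube-state-metrics.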

External Monitoring Services (VictoriaMetrics, Jaeger, OpenTelemetry, etc.)

These open-source tools form a powerful observability stack for Kubernetes environments. VictoriaMetrics handles efficient metric storage, OpenTelemetry standardizes how traces and metrics are collected across services, and Jaeger provides distributed tracing so engineers can follow a request across services and troubleshoot it. Together, they give you flexibility, cost savings, and full control over your monitoring pipeline, without vendor lock-in.

Conclusion

Monitoring Kubernetes is not just about gathering data; it is about gathering the right data so that you can make prompt, well-informed decisions. Knowing which metrics matter is your best defense, whether you're a platform team scaling across clusters, a DevOps engineer optimizing performance, or a site reliability engineer fighting a late-night outage.

That is why a well-defined observability strategy, one that cuts through clutter, highlights what matters, and adapts as your architecture evolves, is no longer optional. Teams are increasingly turning to frameworks, tooling, and purpose-built observability solutions that support this shift toward proactive, insight-driven operations. At the end of the day, metrics are your map, but only if you're reading the right signs. Focus on these key signals, and you'll spend less time digging through data and more time solving real problems.