Introduction

In today's data-driven era, there are numerous modern applications that need to handle a continuous stream of incoming data. For instance, a financial trading platform processes thousands of transactions every second, an IoT system collects sensor readings from numerous devices, an e-commerce site tracks every user interaction, and a security system logs real-time events. In all these cases, the database is always getting new data written to it.

PostgreSQL is robust by design, but to handle high-throughput write workloads, it needs careful planning and smart tuning.

Though PostgreSQL is known for its reliability and scalability, it still requires constant optimization practices. Query optimization plays an important role when it comes to performance issues impacting the efficiency of the database affecting end users. Some of the causes can be due to inefficient queries, insufficient or over indexing, usage of inappropriate data types, high transaction volumes, hardware limitations or locks. With efficient planning such performance issues can cause less impact.

In this blog, we'll walk through a practical, step-by-step approach to optimizing PostgreSQL for high-ingestion systems. We'll cover how to choose the right infrastructure, tune essential database parameters like memory and WAL settings, schema design strategies and how to do effective query tuning. Whether we run PostgreSQL on a cloud platform or on an on-premise, this guide will help to build a resilient system that can handle heavy write workloads.

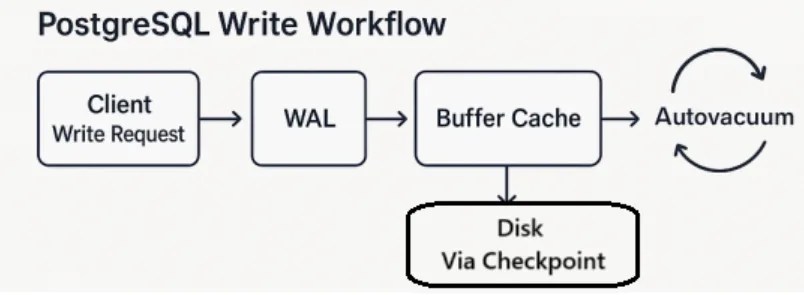

How PostgreSQL Handles Writes

Before you start tuning, it's important to understand the write processes in PostgreSQL:

-

Write-Ahead Logging (WAL): PostgreSQL first writes every change to a log file (WAL) before applying it to tables. - Though it adds some extra write work, this protects data during crashes.

-

Shared Buffers (Buffer Cache): PostgreSQL keeps recently changed data in memory to speed things up. The performance can slow down, if memory is low.

-

Checkpoints: PostgreSQL pushes all in-memory changes to disk at regular intervals. This can cause a sudden spike in disk activity.

-

Autovacuum: PostgreSQL automatically cleans up obsolete data in the background to keep queries fast.

Step 1: Tuning PostgreSQL Write Parameters

PostgreSQL's default settings are usually good for general usage, but it may require further tuning for a write-intensive workload. You can tune key parameters as discussed below.

WAL & Checkpoint Configuration

ALTER SYSTEM SET wal_level = 'replica'; -- Enables replication or Use 'logical' for decoding

ALTER SYSTEM SET wal_compression = 'on';

ALTER SYSTEM SET wal_buffers = '-1'; -- Let PostgreSQL auto-tune WAL buffers

ALTER SYSTEM SET wal_buffers = '64MB'; -- Manual setting for high write workloads

ALTER SYSTEM SET min_wal_size = '2GB'; -- Preallocate WAL to reduce I/O spikes

ALTER SYSTEM SET max_wal_size = '8GB'; -- allows longer checkpoint intervals, reducing I/O spikes

ALTER SYSTEM SET wal_writer_delay = '200ms'; -- WAL writer flush interval

ALTER SYSTEM SET wal_writer_flush_after = '1MB'; -- Flush WAL after 1MB of writes

SELECT pg_reload_conf(); -- Apply changes without restart

sqlThese settings optimize WAL performance by reducing I/O overhead and improving checkpoint management.

Reference - PostgreSQL 17 Documentation – WAL Configuration

Checkpoint Configuration

- Checkpoint settings are configured to prevent sudden disk load.

ALTER SYSTEM SET checkpoint_timeout = '15min';

ALTER SYSTEM SET checkpoint_completion_target = '0.9';

sqlSpreading out checkpoints at intervals helps PostgreSQL to avoid sudden spikes in disk activity.

Parallel Processing Configuration

PostgreSQL can divide complex operations across multiple CPU cores to work more efficiently.

ALTER SYSTEM SET max_worker_processes = 8;

ALTER SYSTEM SET max_parallel_workers = 6; -- Leave 2 cores for OS/other processes

ALTER SYSTEM SET max_parallel_workers_per_gather = 4;

ALTER SYSTEM SET max_parallel_maintenance_workers = 4;

sqlThese settings control parallel processing with 8 total workers (using 4 per query/maintenance). We have to adjust worker counts based on our CPU cores.

Memory & Caching

- Sufficient memory is needed to execute work efficiently.

For example, on a system with 32GB of RAM:

ALTER SYSTEM SET shared_buffers = '8GB'; -- ~25% of total RAM

ALTER SYSTEM SET effective_cache_size = '24GB'; -- ~75% of total RAM

ALTER SYSTEM SET work_mem = '64MB';

ALTER SYSTEM SET maintenance_work_mem = '2GB';

SELECT pg_reload_conf(); -- Apply changes without restarting

sqlThe above parameter configuration helps in the better use of RAM, improving throughput and reducing the reliance on disk.

Step 2: Schema Design for High Write Throughput

The database table design has a direct impact on the write speed. Below can be considered for high-write systems.

Use Time-Based Partitioning

The partitioning of large tables by a timestamp column will reduce write latency and index maintenance:

CREATE TABLE events (

id BIGSERIAL,

created_at TIMESTAMPTZ,

payload JSONB

) PARTITION BY RANGE (created_at);

sqlReference - PostgreSQL 17 Documentation – Table Partitioning

Smart Indexing

Unnecessary indexes are to be avoided. Any extra/unnecessary indexes slow down inserts and updates in the database.

-

Use BRIN indexes for append-only time-series data:

CREATE INDEX idx_events_brin ON events USING BRIN (created_at);sql -

Use partial indexes to target specific query patterns:

CREATE INDEX idx_active ON users (user_id) WHERE status = 'active';sql

Step 3: Write More Efficient SQL

Efficient SQL is as important as tuning the database.

Batch Inserts

Group multiple inserts into a single statement:

INSERT INTO logs VALUES (...), (...), (...);

sqlUse COPY for Bulk Loads

\copy logs(col1, col2) FROM 'data.csv' WITH (FORMAT csv, HEADER);

sqlThe COPY command can be up to 10 times faster than using multiple INSERT statements, especially when dealing with large datasets.

Group Transactions

BEGIN;

INSERT INTO logs ...;

INSERT INTO logs ...;

COMMIT;

sqlThis reduces transaction overhead and increases efficiency.

Avoid SELECT * in Queries

Using SELECT * may look convenient and easy, but it isn't a good choice especially in systems with high-writes.

Implications of using SELECT * below

- It fetches all columns, even the unnecessary columns.

- Consumes extra memory, I/O, and network bandwidth

- Reduces the PostgreSQL ability to use indexes efficiently.

- Can cause issues when new columns are added in future.

Hence It is always recommended to pick only the columns needed.

SELECT user_id, action_type, action_timestamp FROM user_activity_log

WHERE action_timestamp > NOW() - INTERVAL '1 hour';

sqlQuery Planner Settings

ALTER SYSTEM SET default_statistics_target = 100;

ALTER SYSTEM SET random_page_cost = 1.1;

ALTER SYSTEM SET effective_io_concurrency = 200;

sqlThese PostgreSQL parameters optimise query planning for modern SSD-based systems by improving statistics accuracy, adjusting cost models for fast storage, and enabling better parallel I/O utilization.

Step 4: Monitor Continuously

The database is to be monitored regularly to track any problems at the earliest possible and to keep it healthy. As always, performance tuning is never a one-time activity.

Key Metrics to Track:

-

IOPS (Input/Output Operations per Second): If your disk usage crosses 80%, it's the time to upgrade the disk to avoid slowdowns.

-

WAL Generation (Write-Ahead Logs): A very high WAL generation rate means too many small writes are happening inefficiently. You need to optimize queries or batch operations better.

-

Checkpoint Frequency: Frequent checkpoints increase disk I/O pressure, while infrequent checkpoints lead to slower crash recovery. You should tune checkpoint settings to balance disk usage and recovery time.

-

Connection Management: In PostgreSQL, every connection (user, application, or API) uses server memory and CPU. Too many open connections at the same time can overload the server.

To fix: Install PgBouncer, a lightweight connection pooler. it sits between your app and PostgreSQL - like a smart traffic controller.

- Instead of each app/user creating a new connection directly to the database, they connect to PgBouncer.

- PgBouncer maintains a fixed pool of PostgreSQL connections and reuses them across many clients.

- This saves a lot of memory and CPU.

Reference - PgBouncer GitHub

ALTER SYSTEM SET max_connections = 100;

SELECT pg_reload_conf();

sqlThis configuration supports 100 concurrent OLTP connections with optimal memory allocation.

Tools That Help You Monitor:

-

Query Performance Tracking (Built-in PostgreSQL Tools):

-

pg_stat_statements: Shows which queries took the most total time.

SELECT query, calls, total_time, mean_time FROM pg_stat_statements ORDER BY total_time DESC LIMIT 5;sqlReference - pg stat statements – Track SQL Execution Stats

-

pg_stat_bgwriter: Helps analyze background writes and checkpoint behavior.

SELECT * FROM pg_stat_bgwriter;sqlReference - pg stat bgwriter – Background Writer Stats

-

pg_stat_activity: Shows all active connections - who's connected, from where, and what SQL they are running.

SELECT pid, username, state, query FROM pg_stat_activity WHERE state != 'idle';sql

-

-

Cloud Monitoring Tools: If PostgreSQL is hosted on a cloud platform (like AWS, GCP, DigitalOcean, etc.), then most providers offer built-in monitoring dashboards. These help track key metrics like:

- CPU usage

- Memory consumption

- Disk IOPS

- Connection count

You can also set up additional alerts with a few clicks:

- Choose a metric (e.g., CPU%, number of active connections)

- Set a threshold

- Define what happens (send email, trigger webhook, etc.)

Logging And Monitoring Configuration

ALTER SYSTEM SET log_min_duration_statement = 1000;

ALTER SYSTEM SET log_checkpoints = on;

ALTER SYSTEM SET log_lock_waits = on;

ALTER SYSTEM SET log_connections = on;

ALTER SYSTEM SET log_disconnections = on;

ALTER SYSTEM SET deadlock_timeout = '1s';

SELECT pg_reload_conf();

sqlThese settings enhance PostgreSQL performance monitoring by logging slow queries, checkpoints, lock waits, connection activity, and enabling quicker deadlock detection.

Step 5: Autovacuum Tuning

The below configuration helps in auto-cleanup though in high-write systems the default settings don't do enough.

ALTER SYSTEM SET autovacuum_max_workers = 6;

ALTER SYSTEM SET autovacuum_naptime = '10s';

ALTER SYSTEM SET autovacuum_vacuum_cost_limit = 2000; -- default is 200

ALTER SYSTEM SET autovacuum_vacuum_threshold = 100;

ALTER SYSTEM SET autovacuum_analyze_threshold = 100;

sqlAlso, override per-table:

ALTER TABLE big_table SET (

autovacuum_vacuum_scale_factor = 0.1,

autovacuum_analyze_scale_factor = 0.05

);

sqlThis makes the autovacuum earlier, before things get bloated.

Weekly Maintenance – VACUUM & ANALYZE

Write-heavy tables bloat over time regular cleanup is essential.

Weekly Maintenance Script

The PostgreSQL query planner relies on fresh statistics to make smart decisions.

DO $$

DECLARE tbl RECORD;

BEGIN

FOR tbl IN

SELECT schemaname, tablename

FROM pg_tables

WHERE schemaname NOT IN ('pg_catalog', 'information_schema')

LOOP

EXECUTE format('ANALYZE %I.%I', tbl.schemaname, tbl.tablename);

END LOOP;

END $$; -- This script loops through all user tables and updates statistics like row counts, dead/live tuples, and column data distribution.

sqlCleanup by VACUUM

Vacuum cleans the obsolete data / dead rows that are left behind post any updates/deletes. The space is reclaimed, the table size is shrunk accordingly, and the index usage is efficient.

DO $$

DECLARE tbl RECORD;

BEGIN

FOR tbl IN

SELECT schemaname, tablename

FROM pg_tables

WHERE schemaname NOT IN ('pg_catalog', 'information_schema')

LOOP

-- Always refresh stats

EXECUTE format('ANALYZE %I.%I', tbl.schemaname, tbl.tablename);

-- Conditionally vacuum if the table has had heavy modifications

IF EXISTS (

SELECT 1

FROM pg_stat_user_tables

WHERE schemaname = tbl.schemaname

AND relname = tbl.tablename

AND n_mod_since_analyze > 10000

) THEN

EXECUTE format('VACUUM VERBOSE %I.%I', tbl.schemaname, tbl.tablename);

END IF;

END LOOP;

END $$; -- If a table had more than 10,000 modifications, VACUUM clears out the dead rows.

sqlThis can be automated as a script using a cron job to run weekly.

Real-World Case Study: IoT Data Ingestion at Scale

Scenario: An IoT platform collecting sensor data from 15,000+ devices every 5 seconds.

Solution Setup:

- Choose right-sized memory, WAL & checkpoint Settings

- Partitioned table by month

- Lightweight BRIN Index on the timestamp column.

- Batched incoming data and loaded them using COPY every 10 seconds.

- Used PgBouncer for Connection Pooling.

- Tracked metrics like WAL size, IOPS, checkpoint frequency, and connection count.

- Automated VACUUM and ANALYZE jobs weekly.

Validating the Impact

The improvements were verified using PostgreSQL's built-in observability tools and custom dashboards:

-

With pg_stat_statements tracked average latency and total execution time of INSERT operations.

-

Using pg_stat_bgwriter checkpoint behavior has been measured before and after tuning.

-

With pg_wal_lsn_diff() analysed WAL generation volume over time from historical logs.

-

Grafana dashboards revealed the trends in connection usage, autovacuum timing, and ingestion latency is visualized across a 4-week window.

All metrics were consistently tracked before and after the tuning phase, confirming statistically significant improvements without impacting data accuracy or reliability.

Results

| Metric | Before | After | Improvement |

|---|---|---|---|

| Insert Speed | 2,000/sec | 12,000/sec | 6× faster |

| Latency | 450 ms | 85 ms | 81% reduction |

| WAL Size/Cost | 10 GB | 6 GB | 40% smaller |

This kind of effective tuning delivers: higher performance, lower latency, and reduced costs.

Conclusion

PostgreSQL can handle heavy write workloads only if it's properly planned and smartly tuned. Though PostgreSQL is strong, reliable and scalable, the default config settings are meant only for general workloads and might not work efficiently in systems that deal with frequent inserts or updates.

High-ingestion systems (handling lots of writes) need more than the default PostgreSQL settings. It requires memory allocation optimization, fine tuning of WAL and checkpoint behavior, and designing schemas for performance: use partitioning, keep indexes minimal, and batch inserts to reduce overhead. Additionally, write efficient SQL that aligns with PostgreSQL's concurrency model.

Optimization is not a one-time task but rather a continuous practice. Regularly monitoring metrics, adjusting parameters based on observed load and automate maintenance routines like VACUUM and ANALYZE.

Every workload is different hence always suggesting to test the configuration changes first in a staging environment, measure the real-world traffic and refine based on the insights provided by metrics.

With the right tuning which involves the proper understanding and addressing various factors PostgreSQL can easily scale up, deliver 5-10x better throughput and maintain strong data integrity and system stability even under the heaviest write pressures.

Struggling with Postgres performance?

Our team can help with Optimization and Scaling your PostgreSQL database