Introduction

The world of Site Reliability Engineering (SRE) and cloud-native operations is undergoing a fundamental paradigm shift. Traditional manual and static automation methods can no longer keep pace with the accelerating complexity and scale of modern Kubernetes environments. Across the industry, we’re seeing an explosion of new approaches: from AI copilots like ChatGPT MCP, to orchestration frameworks like LangChain, to adaptive observability and remediation platforms. Together, they signal a move toward a more intelligent, autonomous ecosystem.

Enter Agentic AI, a new frontier where agents don’t just execute commands but actively plan, reason, and collaborate to solve operational challenges. In this landscape, kagent stands out as a purpose-built AI platform designed to revolutionize Kubernetes operations. At its core, kagent’s architecture is powered by the Model Context Protocol (MCP) and a multi-agent reasoning model, enabling seamless tool integration and dynamic agent-to-agent collaboration. The result: cloud-native operations that are smarter, faster, and more autonomous.

What is Kagent?

kagent is an open-source AI platform purpose-built for cloud-native operations, especially Kubernetes. Unlike traditional automation tools that follow rigid workflows or chatbots that only provide scripted responses, kagent’s AI agents think critically, analyze deeply, and act decisively. It grew directly out of real enterprise incident experiences and the challenges operators face in bridging expertise gaps.

As a CNCF Sandbox project, kagent is gaining strong traction with a dedicated open-source community on GitHub and active discussion channels like CNCF Slack and Discord. This community-driven momentum reflects its promise to redefine how organizations manage complex Kubernetes ecosystems.

Kagent Architecture

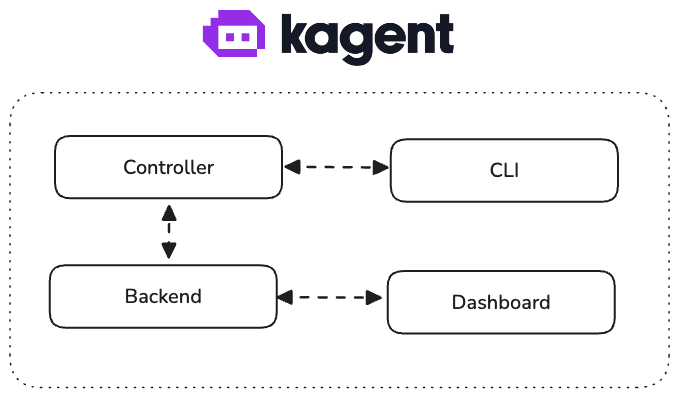

Kagent’s architecture is built around three primary components:

- Controller: A Kubernetes controller written in Go, managing custom resource definitions (CRDs) for agents and tools.

- Engine: A Python-based application responsible for running the agent’s conversational and reasoning loop, built atop Google’s Agent Development Kit (ADK).

- CLI and Dashboard (UI): Developers can interact with agents via a CLI or a web-based dashboard, both of which integrate deeply with Kubernetes for resource management.
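
To make the Engine's "conversational and reasoning loop" more concrete, here is a minimal sketch of such a loop in plain Python. It is not kagent's or ADK's actual implementation: `stub_model`, `get_pod_status`, and the message format are hypothetical stand-ins, and the model call is replaced by a hard-coded function so the example runs without any LLM.

```python
from typing import Callable, Dict, List

# Hypothetical tool: a real one would query the Kubernetes API.
def get_pod_status(name: str) -> str:
    return f"pod {name} is in CrashLoopBackOff"

# Hypothetical tool registry, keyed by the name the model asks for.
TOOLS: Dict[str, Callable[[str], str]] = {"get_pod_status": get_pod_status}

def stub_model(history: List[dict]) -> dict:
    """Stand-in for an LLM call: request one tool, then answer from its result."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "tool": "get_pod_status", "arg": "web-0"}
    return {"type": "final", "text": f"Diagnosis: {tool_results[-1]['content']}"}

def run_agent(question: str, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool execution until a final answer."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = stub_model(history)
        if decision["type"] == "final":
            return decision["text"]
        # Execute the requested tool and feed the result back to the model.
        result = TOOLS[decision["tool"]](decision["arg"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached without a final answer."

if __name__ == "__main__":
    print(run_agent("Why is web-0 failing?"))
```

The step limit is a deliberate design choice in this sketch: an agent that keeps calling tools without converging should stop and report rather than loop indefinitely.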

Agents are described declaratively using YAML manifests, specifying models (such as GPT-4 or domain-specific LLMs), chosen tools, and operational prompts.
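
As a rough illustration of what "described declaratively" means, the sketch below assembles an agent definition as a Kubernetes-style custom resource and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The `apiVersion`, the `spec` field names, and the tool name are assumptions made for this example; check the CRDs installed in your cluster for the real schema.

```python
import json

def build_agent_manifest(name: str, model_config: str,
                         system_prompt: str, tools: list) -> dict:
    """Assemble a Kubernetes-style custom resource as a plain dict."""
    if not name or not tools:
        raise ValueError("an agent needs a name and at least one tool")
    return {
        # Assumed group/version and field names; not taken from the kagent docs.
        "apiVersion": "kagent.dev/v1alpha1",
        "kind": "Agent",
        "metadata": {"name": name, "namespace": "kagent"},
        "spec": {
            "modelConfig": model_config,       # which model configuration to use
            "systemMessage": system_prompt,    # the operational prompt
            "tools": [{"name": t} for t in tools],
        },
    }

if __name__ == "__main__":
    manifest = build_agent_manifest(
        name="k8s-helper",
        model_config="default-model-config",   # hypothetical resource name
        system_prompt="You help operators troubleshoot Kubernetes workloads.",
        tools=["get_pod_status"],              # hypothetical tool name
    )
    print(json.dumps(manifest, indent=2))
```

Keeping the three ingredients (model, tools, prompt) in one resource is what lets the usual Kubernetes workflow, such as version control, review, and `kubectl apply`, carry over to agents.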

This layered design allows kagent to extend organically by adding new agents and tools or connecting external systems.

Kagent Features

- Kubernetes-Native Deployment: Enables organizations to define, deploy, and manage AI agents using familiar Kubernetes tooling.

- Built-in Tools and MCP Integration: Agents gain access to built-in capabilities such as querying metrics, managing rollouts, and debugging configurations, with support for integrating additional MCP-compatible tools.

- Extensibility: Easily create custom agents and tools for specialized operational tasks.

- End-to-End Observability: Native metrics, logs, distributed tracing, and audit trails support comprehensive system insights.

- AI-Driven Automation: Supports scenarios like alert generation, rollout automation, manifest generation, and more using natural language instructions.
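
For readers curious about what "MCP-compatible" implies on the wire: MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method. The sketch below only builds such a request; the tool name `query_metrics` and its arguments are hypothetical, and transport and response handling are left out.

```python
import itertools
import json

# Monotonic request ids, as JSON-RPC requires an id to match responses.
_ids = itertools.count(1)

def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

if __name__ == "__main__":
    # Hypothetical tool and arguments, for illustration only.
    print(mcp_tool_call("query_metrics", {"query": "up"}))
```

Because every tool is reached through the same request shape, adding a new tool to an agent is mostly a matter of pointing it at another MCP server rather than writing agent-specific glue.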

What Kagent Can't Do

While powerful, Kagent has limitations:

- Complex decision-making beyond current LLM capabilities still requires human judgment.

- Critical security or compliance decisions should always involve human oversight.

- Rapidly evolving incidents may require experts for nuanced troubleshooting.

- Deep domain knowledge in specific business systems may be out of scope for general-purpose agents.

In these cases, Kagent acts as an assistant, not a replacement, handing off to experts when necessary.
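
One way to picture this handoff is a small policy gate that lets an agent act on low-risk steps and routes everything else to a person. The sketch below is purely illustrative: the `RISKY_VERBS` set, the `ProposedAction` type, and the protected namespace rule are assumptions for this example, not a kagent feature.

```python
from dataclasses import dataclass

# Hypothetical policy: verbs considered risky enough to need a human sign-off.
RISKY_VERBS = {"delete", "scale", "patch", "drain"}

@dataclass
class ProposedAction:
    verb: str        # what the agent wants to do, e.g. "get" or "delete"
    resource: str    # target resource, e.g. "deployment/web"
    namespace: str

def requires_human_approval(action: ProposedAction) -> bool:
    """Flag mutating verbs and anything touching the kube-system namespace."""
    return action.verb in RISKY_VERBS or action.namespace == "kube-system"

def dispatch(action: ProposedAction) -> str:
    """Either run the action automatically or hand it off to an operator."""
    if requires_human_approval(action):
        return f"HANDOFF: {action.verb} {action.resource} needs operator approval"
    return f"AUTO: {action.verb} {action.resource}"

if __name__ == "__main__":
    print(dispatch(ProposedAction("get", "pod/web-0", "default")))
    print(dispatch(ProposedAction("delete", "deployment/web", "default")))
```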

Cost and Limitations

- As an open-source project, Kagent itself has no licensing costs.

- Running LLMs and AI inference engines involves cloud compute costs that vary by model and usage.

- Resource overhead from running agents inside Kubernetes should be considered.

- The framework is evolving; integration with certain cloud-native tools or LLM providers may require additional setup.

- Success depends on correct prompt engineering and tool configurations.
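
Since inference cost "varies by model and usage", a back-of-the-envelope estimate can help with budgeting. The sketch below multiplies request volume by per-token prices; every number in the example call is a placeholder, not a quote from any provider.

```python
def monthly_llm_cost(requests_per_day: int,
                     input_tokens: int,
                     output_tokens: int,
                     price_in_per_1k: float,
                     price_out_per_1k: float,
                     days: int = 30) -> float:
    """Estimate monthly spend from average tokens per request and per-1k-token prices."""
    per_request = (input_tokens / 1000) * price_in_per_1k \
                + (output_tokens / 1000) * price_out_per_1k
    return round(requests_per_day * days * per_request, 2)

if __name__ == "__main__":
    # Placeholder workload and prices; substitute your provider's actual rates.
    estimate = monthly_llm_cost(
        requests_per_day=200,
        input_tokens=2000,
        output_tokens=500,
        price_in_per_1k=0.01,
        price_out_per_1k=0.03,
    )
    print(f"Estimated monthly LLM cost: ${estimate}")
```

Agents that call several tools per question send multiple model requests for each user interaction, so the effective `requests_per_day` is usually higher than the number of questions asked.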

Kagent in Action

For a hands-on demo of Kagent, refer to the recorded video walkthrough that accompanies this post. The video covers the complete journey of getting started with Kagent, including:

- Installing Kagent on your Kubernetes environment

- Creating and deploying your first custom AI agent

- Testing the agent with Kubernetes troubleshooting scenarios

- Taking a quick look at KMCP and its role in the ecosystem

- Exploring different supported provider models

- Learning how to define new model configurations, tools, and agents

- Understanding how Kagent works behind the scenes

This step-by-step demo is designed to help you quickly gain practical experience, experiment with Kagent’s capabilities, and understand its flexibility in real-world use cases.

Conclusion

While kagent represents a significant advancement in AI-driven cloud-native operations, it is not yet a flawless or fully autonomous solution. Deep domain expertise remains crucial for handling edge cases, complex incidents, and environments with nuanced contexts. kagent’s design philosophy reflects this: it aims to augment human expertise rather than replace it.

By combining cutting-edge agentic AI with real-world operational wisdom, kagent helps organizations close the gap between complexity and reliability. It invites engineers and SREs to experiment, contribute, and harness the future potential of intelligent, collaborative automation in Kubernetes operations.

If you are looking for a custom AI solution to integrate into your cloud native stack, we can help you build one tailored to your specific needs. Contact us today to learn more.