Introduction

Every day, enormous amounts of text, photos, and videos are uploaded to social media platforms. As a result, companies need an efficient way to monitor the content that their platforms host, protect their brand and users, improve online visibility, and enhance customer relations. The process of screening what people upload to a site for suitability is known as content moderation.

As part of the process, pre-established rules are applied to monitor the content; if it doesn't comply with the requirements, it is flagged and removed. Content can be flagged for many reasons, such as violence, offensiveness, extremism, nudity, hate speech, and copyright violations. Maintaining the brand's safety and making the platform safe to use are the two main objectives of content moderation. Social media, dating websites and apps, e-commerce sites, marketplaces, forums, and similar platforms frequently use content moderation.

Challenges

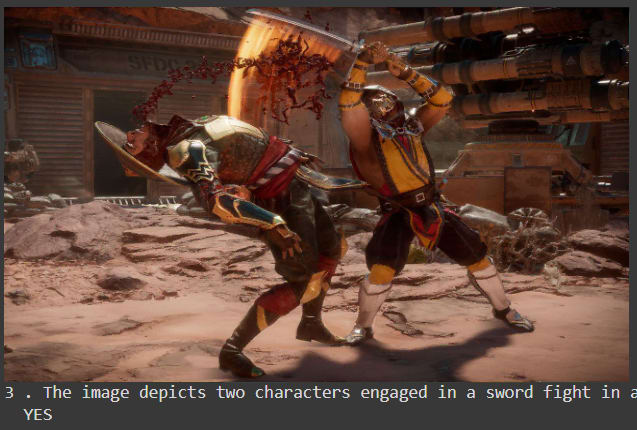

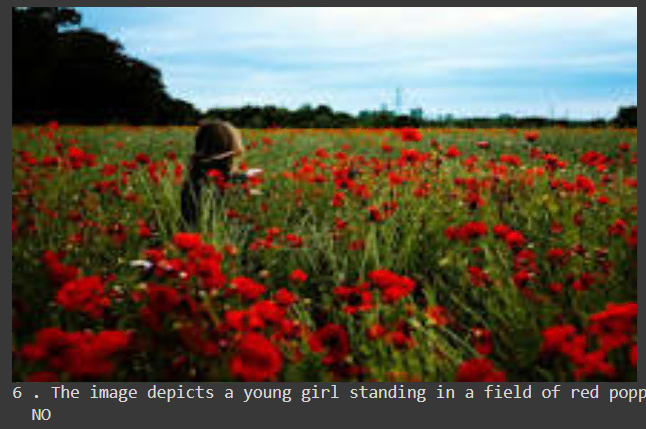

Let’s take an example to understand the challenge in a use case where the users are children. We have two images here, and only one of them is appropriate for kids.

Would you expose kids to the gory first image at the top? The second image, by contrast, doesn't evoke any harmful emotions. We have to ensure safety and keep both AI-generated and user-generated content (UGC) in check. With AI, we can detect and mitigate gory, toxic, disturbing, or otherwise harmful content.

In the demo section below, we show how content that violates moderation rules can be filtered out. We specifically address how inappropriate images can be filtered using generative AI tools such as LlamaIndex, Microsoft Phi-3, and Moondream2. There are some constraints worth noting first; to go straight to the code, skip to the Demo section.

As the demand for effective content filtering grows, enterprises are increasingly turning to generative AI to address various business needs, including product cataloging, customer service, and summarization tasks. However, a notable gap persists in leveraging generative AI for enterprise solutions.

- Data Dependency: The performance of generative AI models relies heavily on the quality and quantity of training data. This dependency often introduces biases and limitations, hindering the model's ability to provide unbiased and comprehensive solutions.

- Limited Control: Enterprises commonly use pre-existing models developed by external companies, which leaves them without control over the underlying data. This lack of control makes it harder to explain the model's behavior and to customize it to specific business requirements.

Although technology simplifies the moderation process, businesses still need to strike a balance between effectiveness, efficiency, and user experience. Every moderation strategy has benefits and drawbacks that should be carefully considered depending on the platform's target audience, user base, and the content generated. In the end, a thorough moderation approach helps businesses uphold a trustworthy and secure online environment.

Limitations of Traditional Methods in Content Moderation

Traditional methods of content moderation, such as manual review, keyword filtering, and rule-based systems, pose several limitations in effectively managing large volumes of data.

- Manual review is time-consuming and labor-intensive, making it unsuitable for dealing with the massive amounts of content generated every day. Decisions on content moderation may also be inconsistent due to human moderators’ difficulties with bias and accuracy when interpreting guidelines.

- Keyword filtering and rule-based systems can automate some aspects of the moderation process, but they are frequently rigid and may fail to detect changing forms of improper content. These methods are constrained by their inability to adapt to new trends or circumstances, resulting in many false positives.

- As platforms grow rapidly and user activity varies, it can be difficult for traditional moderation techniques to keep up, posing a scalability issue.

- It is possible to unintentionally restrict acceptable information or miss new, dangerous types of content if moderation rules are hardcoded or exclusively depend upon predictive algorithms. These systems lack the flexibility to adapt to evolving user behavior or emerging threats, potentially compromising the platform's effectiveness in maintaining a safe and inclusive environment.
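The rigidity of keyword filtering described above can be illustrated with a minimal sketch. The blocklist and sample sentences are hypothetical and chosen only to show one false positive and one missed violation; a production filter would be more elaborate.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"kill", "shoot"}


def keyword_flag(text: str) -> bool:
    """Flag text if any blocklisted word appears as a whole word."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


samples = [
    "I will kill you",                  # harmful and caught
    "This workout will kill your abs",  # harmless, yet flagged (false positive)
    "I will k1ll you",                  # harmful but obfuscated, so it is missed
]

for sample in samples:
    print(keyword_flag(sample), "|", sample)
```

The filter has no notion of context or intent, which is the gap that the model-based approaches discussed later try to close.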

After exploring the technicalities of content moderation, it is clear that the task is a complex one. So what exactly makes generative AI so strong in this context? Let's look at some of the benefits it offers.

Benefits of Generative AI in Content Moderation

Some of the key benefits are:

- Improved Accuracy

- Better Scalability

- Reduction in Manual Moderation and Operational Costs

- Real-time Analysis

- Custom policies

- Enhanced User Experience

- Offers Insights such as Toxicity Score, Sentiment, and Spam Score

- Multi-Lingual Support

Understanding generative AI’s benefits showcases its full potential. As we continue to explore different use cases across different platforms and sectors, we learn how it can be integrated to meet different demands. Considering the huge potential of generative AI, let us look at some use cases of this technology that are helping in transforming content moderation techniques and improving user experience.

Popular Use Cases of AI Content Moderation

Here are some of the most popular use cases for using AI in content moderation:

- E-commerce sites: Content moderation helps in screening product descriptions, photos, and user reviews for inappropriate or misleading content, ensuring a secure environment.

- Social media platforms: AI can analyze user-generated content, identify harmful or toxic posts, and limit the spread of misinformation, improving the overall user experience.

- Online communities and forums: AI-based moderation can help maintain community standards by detecting and removing off-topic, spammy, or inappropriate content.

- Educational platforms: Content moderation can ensure that educational materials and discussions adhere to appropriate guidelines, creating a safe and conducive learning environment.

- Gaming platforms: AI can monitor in-game chat and interactions, detecting and mitigating toxic behavior, harassment, and other violations of community rules, and promoting fair play.

- News and media websites: AI-based moderation can help identify and combat the spread of fake news, misinformation, and harmful content, promoting trust and credibility.

- Government sector: AI-powered content moderation can assist government organizations in managing and moderating public forums, ensuring compliance with regulations and maintaining a respectful and productive dialogue.

- Output of LLMs (Explainable AI): As the use of LLMs in content moderation increases, it becomes crucial to implement Explainable AI techniques. These techniques provide transparency into the decision-making process, allowing stakeholders to understand and address potential biases or inconsistencies, promoting fairness and accountability in content moderation practices.

Solution

Using AI in the moderation process offers a way to overcome the shortcomings of conventional content filtering techniques discussed in the previous section. With AI-powered automatic moderation, platforms can create custom policies adapted to their particular requirements. This allows for real-time monitoring and response systems that quickly detect and handle unwanted content. AI-driven algorithms make it possible to analyze text, images, and video at scale, so platforms can manage enormous volumes of data effectively while preserving accuracy and consistency.

Additionally, by examining patterns and trends in user-generated content, platforms can gain insights such as sentiment, toxicity scores, and spam scores, and spot emerging issues early. This enables companies to adjust their moderation policies and methods as necessary.

Large Language Models (LLMs), vector databases, and image captioning are some important components that improve AI-powered content moderation systems. Image captioning algorithms use deep learning techniques to produce descriptive captions for photos, which helps platforms comprehend the context and content of images more effectively. This image data is kept in vector databases, which makes it easier to identify improper imagery and conduct effective similarity searches. With LLMs, such as GPT-based models, platforms can assess text-based content more accurately and contextually.
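To make the idea of a similarity search concrete, here is a minimal sketch using cosine similarity over toy vectors. The three-dimensional "embeddings" and their labels are invented for illustration; real embedding models produce vectors with hundreds of dimensions, and a vector database would replace the plain dictionary used here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query, store):
    """Return (label, score) for the stored vector most similar to the query."""
    scored = ((label, cosine_similarity(query, vec)) for label, vec in store.items())
    return max(scored, key=lambda pair: pair[1])


# Hypothetical toy embeddings of reference captions.
store = {
    "graphic violence": [0.9, 0.1, 0.0],
    "family picnic": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a newly uploaded image's caption.
query = [0.8, 0.2, 0.1]

label, score = nearest(query, store)
print(label, round(score, 3))
```

A caption whose embedding lies close to known violating content can then be passed on for a closer check.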

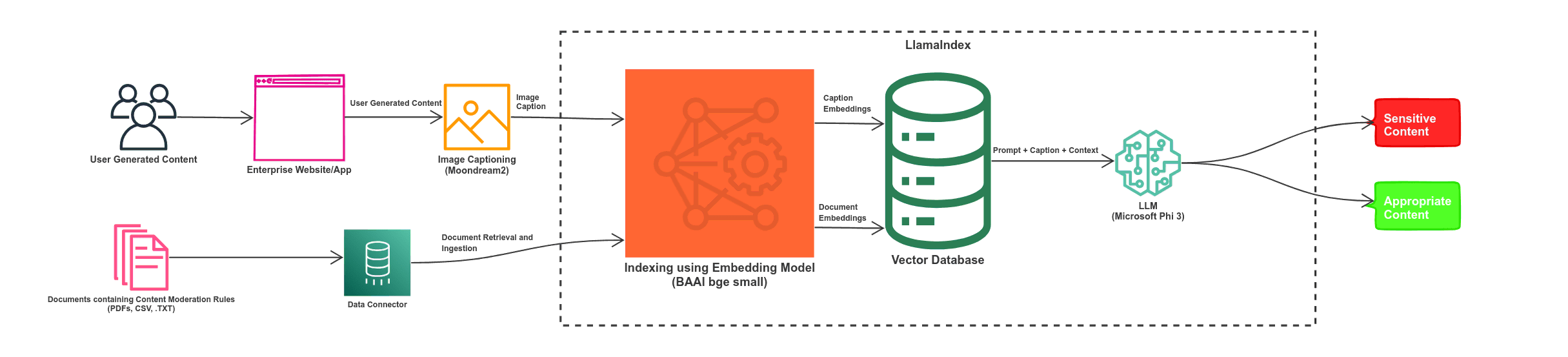

How it Works

Considering we are trying to moderate images in our use case, we will proceed as follows:

- Processing images and extracting features using techniques like image captioning with small language models (SLMs) such as Moondream2 to understand the visual and textual content.

- Indexing user-generated content in a vector index built with a framework like LlamaIndex, allowing quick retrieval and informed decision-making. The index holds examples of content that violates moderation rules, and operators can customize it.

- Using LLMs and custom algorithms to assess the appropriateness of text-based content (descriptions, comments, captions) against preset moderation guidelines for offensive material like violence, nudity, and hate speech. Operators can fine-tune policies and sensitivity thresholds.

- Real-time monitoring of user interactions with AI-powered algorithms to flag inappropriate or potentially harmful content for human moderators, limiting the spread of misinformation and harassment.

- Finally, to guarantee seamless operation at scale, systems can make use of distributed computing, parallel processing, and optimization approaches, or leverage the solutions we offer.
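The policy and threshold tuning mentioned in the steps above could look roughly like the following sketch. The `Policy` class, the threshold values, and the assumption that an upstream model yields a harm score between 0 and 1 are all hypothetical; they only illustrate how the same score can lead to different actions on different platforms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical per-category policy with two sensitivity thresholds."""
    category: str
    review_threshold: float  # at or above this, send to a human moderator
    remove_threshold: float  # at or above this, remove automatically


def route(score: float, policy: Policy) -> str:
    """Map a harm score in [0, 1] to an action under the given policy."""
    if score >= policy.remove_threshold:
        return "remove"
    if score >= policy.review_threshold:
        return "human_review"
    return "allow"


# A children's platform is stricter than a general-purpose forum.
kids_platform = Policy("violence", review_threshold=0.2, remove_threshold=0.5)
general_forum = Policy("violence", review_threshold=0.5, remove_threshold=0.9)

print(route(0.6, kids_platform))  # remove
print(route(0.6, general_forum))  # human_review
```

Keeping thresholds in data rather than in code lets operators adjust sensitivity without redeploying the moderation service.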

Demo

We will be demonstrating the usage of Generative AI for analyzing images and classifying them as inappropriate and appropriate using open-source models.

Firstly, let's make sure we have installed all prerequisites.

```python
!pip install llama-index llama-index-llms-huggingface llama-index-embeddings-instructor llama-index-embeddings-huggingface sentence_transformers torch torchvision "transformers[torch]" "huggingface_hub[inference]"
```

Next, set up our workspace and get ready for the content moderation task.

```python
# LlamaIndex: indexing, embeddings, global settings, and LLM wrappers
from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.embeddings.huggingface import HuggingFaceEmbedding
from llama_index.core import Settings
from llama_index.llms.huggingface import (
    HuggingFaceInferenceAPI,
    HuggingFaceLLM,
)

# Transformers and PIL: loading the captioning model and the images
from transformers import AutoModelForCausalLM, AutoTokenizer
from PIL import Image

import os
from os import path
```

In this example, we'll use Moondream2, an image captioning model available on Hugging Face, to analyze an image. Moondream2 is a small vision model designed to run efficiently on edge devices.

```python
# Load pre-trained Moondream2 model and tokenizer
model_id = "vikhyatk/moondream2"
revision = "2024-04-02"

model = AutoModelForCausalLM.from_pretrained(
    model_id, trust_remote_code=True, revision=revision
)
tokenizer = AutoTokenizer.from_pretrained(model_id, revision=revision)

# Load an image for processing
image = Image.open("<IMAGE_PATH>")  # replace the placeholder with your image path
```

Once the model is loaded, we generate the caption for each image and save it as a .txt file in a folder named data_folder for easy retrieval.

```python
# Caption the image
image = Image.open("<IMAGE_PATH>")  # replace the placeholder with your image path
enc_image = model.encode_image(image)
image_caption = model.answer_question(
    enc_image, "Describe this image in detail with transparency.", tokenizer
)
print(image_caption)

# Create the data_folder directory if it does not already exist
data_dir = "/content/data_folder"
os.makedirs(data_dir, exist_ok=True)

# Store the caption as a .txt file in the same folder that is indexed later
with open(path.join(data_dir, "image_caption.txt"), "w") as text_file:
    text_file.write(image_caption)
```

For this demo, we use two kinds of images: one that may be flagged as inappropriate and one that should not be flagged.
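The snippet above captions a single image. To caption every image in a folder, a small helper along these lines could be used. This is a sketch under assumptions: `caption_folder`, the suffix list, and the output naming scheme are hypothetical, and the captioning step is passed in as a function so the helper does not depend on any particular model.

```python
from pathlib import Path
from typing import Callable

# Assumed set of image file extensions; extend as needed.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def caption_folder(
    image_dir: str, out_dir: str, caption_fn: Callable[[Path], str]
) -> list[Path]:
    """Write one caption file per image in image_dir and return the files written."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for image_path in sorted(Path(image_dir).iterdir()):
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip non-image files
        # Keep the full image name so a.png and a.jpg do not collide.
        caption_file = out / f"{image_path.name}.txt"
        caption_file.write_text(caption_fn(image_path), encoding="utf-8")
        written.append(caption_file)
    return written


# Possible wiring with the demo's model (not executed here):
# caption_folder(
#     "images",
#     "/content/data_folder",
#     lambda p: model.answer_question(
#         model.encode_image(Image.open(p)), "Describe this image in detail.", tokenizer
#     ),
# )
```

Passing the captioner as a parameter also makes it easy to swap Moondream2 for another captioning model later.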

Next, let's prepare the data for indexing. We'll use LlamaIndex to index the documents that contain the content moderation rules. For this demo, we are using the Aegis AI Content Safety Dataset by NVIDIA from Hugging Face, which contains examples of text-based content considered inappropriate. We need to upload this dataset, along with the image captions, to the directory /content/data_folder.

```python
# Load documents for indexing
loader = SimpleDirectoryReader(input_dir="/content/data_folder")
documents = loader.load_data()

# Initialize embedding model and index
embedding_model = HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5")
index = VectorStoreIndex.from_documents(
    documents,
    embed_model=embedding_model,
)
```

This data lets the LLM reference the documents during inference, making it context-aware.

Now that setup is complete, let's move on to a quick use case implementation. We'll employ an LLM to decide whether an image is offensive based on the generated caption. We use Microsoft's Phi-3 model, available on Hugging Face, as the LLM; it is a small language model designed for efficient inference.

```python
# Load the model using the Hugging Face Inference API
remotely_run = HuggingFaceInferenceAPI(model_name="microsoft/Phi-3-mini-4k-instruct")

# Set the tokenizer and LLM
Settings.tokenizer = tokenizer
Settings.llm = remotely_run

# Enable search over the indexed data
query_engine = index.as_query_engine()
```

Now we let the LLM decide, based on the caption, the prompt, and the indexed documents, whether the image should be flagged as inappropriate.

```python
# Perform moderation decision using LLM
wrapper = (
    "Based on the knowledge in the documents, tell whether the caption of the "
    "image is sensitive or not: " + image_caption
)
response = query_engine.query(wrapper)
print(response)
```

Considering our sample images, we have the following results:

Upon inference, the LLM correctly flags the content as shown above.
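Because the LLM answers in free text, a pipeline usually needs to turn that answer into a decision it can act on. The sketch below shows one possible way to do this; `build_prompt`, `parse_verdict`, and the SENSITIVE/SAFE answer format are assumptions introduced for illustration, not part of the demo above.

```python
import re


def build_prompt(caption: str) -> str:
    """Ask for a verdict word first so the reply is easy to parse."""
    return (
        "Using the moderation documents as reference, decide whether the "
        "following image caption is sensitive. Answer with the single word "
        "SENSITIVE or SAFE, followed by one sentence of justification.\n"
        f"Caption: {caption}"
    )


def parse_verdict(response_text: str) -> str:
    """Map the model's reply to 'flagged', 'approved', or 'needs_review'."""
    words = re.findall(r"[a-z]+", response_text.lower())
    first = words[0] if words else ""
    if first == "sensitive":
        return "flagged"
    if first == "safe":
        return "approved"
    # Anything that does not follow the requested format goes to a human.
    return "needs_review"


print(parse_verdict("SENSITIVE. The caption describes blood and injuries."))
print(parse_verdict("Safe - a dog playing in a park."))
print(parse_verdict("I am not sure about this one."))
```

In the demo, this could be wired up as `parse_verdict(str(query_engine.query(build_prompt(image_caption))))`. Routing unparseable replies to human review keeps an off-format answer from silently approving content.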

This demo illustrates how we may utilize AI-driven models and frameworks to assess user-generated material and decide on moderation in real time.

To understand and customize the content moderation system better, the next steps would be to look at more complicated situations, use cases, interactive examples, and documentation.

Conclusion

Content moderation is an essential component of any organization trying to preserve safety, trust, and brand integrity in the quickly changing digital landscape. Strong moderation solutions are more important than ever due to the exponential rise of user-generated material on various platforms. Thankfully, the introduction of artificial intelligence (AI) has completely changed how businesses handle content filtering. This is especially true for powerful tools like Large Language Models (LLMs) and sophisticated image processing algorithms.

Businesses can benefit from a variety of advantages by adopting AI-driven moderation solutions, from improved accuracy and scalability to lower operating expenses. Adaptable rules, in-the-moment analysis, and intuitive data analytics enable enterprises to proactively counter new risks and guarantee effective user experiences. Furthermore, AI-powered moderation has become more flexible and accessible across a range of industries with the help of accessible frameworks and APIs like LlamaIndex.

Enhance your content moderation with AI-driven solutions for improved accuracy, scalability, and cost efficiency.
